SEO Measurement Mistakes Part 3: Crawling & Indexation Metrics

This article is part of a series:

- In part 1, we discussed common mistakes regarding top-level SEO KPIs.

- In part 2, we looked at common mistakes made when analyzing SEO metrics in Google Analytics.

- The final article in this series is on link metrics.

Today, I want to cover 10 critical errors in analyzing and monitoring crawling and indexation metrics.

1. Not Monitoring Indexation

In SEO 101, we learn that the search engines build an index that contains all pages which might be eligible for display in the search engine results pages (SERPs). For a given page to get any traffic via search engines, it must be in the index, so it makes sense to make sure that the pages you want to get traffic to are indexed.

Further, it makes sense to ensure that pages you don’t want indexed are not indexed. Naturally, a common mistake we see is total failure to monitor indexation at all.

Monitoring indexation helps discover two important issues – under-indexation and over-indexation.

Under-indexation is when pages you want traffic to are not getting indexed. This especially occurs if the pages are new or infrequently linked to (such as pages deep inside your site) and means you could be missing out on traffic.

Over-indexation is when pages you don’t want indexed are indexed, leading to private pages being public or duplicate content.

If more pages are indexed than the number of pages you want to be search engine landing pages, then you may have index bloat. As with duplicate content, if your site has index bloat, the consequent dissipation of link juice and constrained crawl budget can have a significant impact on SEO traffic.
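To make the two problems concrete, here is a minimal sketch of the comparison. It assumes you already have two lists: the URLs you want indexed (for example, from your Sitemap) and the URLs you believe are indexed, however you obtained them. The function name and sample URLs are hypothetical.

```python
def indexation_gaps(wanted_urls, indexed_urls):
    """Return (under_indexed, over_indexed) as sorted lists of URLs."""
    wanted = set(wanted_urls)
    indexed = set(indexed_urls)
    under_indexed = sorted(wanted - indexed)  # wanted, but not in the index
    over_indexed = sorted(indexed - wanted)   # in the index, but not wanted
    return under_indexed, over_indexed


# Hypothetical sample data for illustration only.
wanted = ["/", "/products/widget", "/blog/new-post"]
indexed = ["/", "/products/widget", "/products/widget?sessionid=123"]

under, over = indexation_gaps(wanted, indexed)
print("Under-indexed:", under)
print("Over-indexed:", over)
```

If the over-indexed list is much longer than the list of pages you actually want as landing pages, that is the index bloat described above.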

2. Not Monitoring the Crawl

Another thing we learn in SEO 101 is that before the search engines can even index a page, they must crawl the page. There are basically three kinds of crawl issues.

Crawling too few URLs: When pages you want to be crawled are not crawled frequently enough or at all, eliminating them or their newest content from eligibility for indexation and inclusion in the SERPs. This can occur if you have accidentally prevented the page from being crawled OR if the search engines are over-crawling other pages.

Crawling too many URLs: One of the reasons important pages won’t get crawled as frequently as you want (or at all) is if the search engines are too busy crawling pages that are unimportant or not crawl-worthy.

Crawl errors: When the search engines have a problem downloading a specific URL.

There are things you can do to mitigate these issues (which often reveal deeper problems with your site), but it’s a common mistake to not monitor for these issues at all. Ensuring the search engines are crawling the content that matters in a timely manner can have a solid impact on SEO. Later on, we’ll get into how to uncover these issues using Webmaster Tools.

3. Using Site:search

The “site: search” is a common way people check on the number of pages a search engine indexed for their site. Unfortunately, it’s notoriously inaccurate.

SEO legend Rand Fishkin does a great job explaining how terrible this data is in Indexation for SEO: Real Numbers in 5 Easy Steps. There are simply better metrics than the site: search.

4. Not Paying Attention to Which Pages Get Traffic

In that article, Rand explains the importance of looking at the number of pages receiving organic search engine traffic. After all, that’s what all this obsession about content being crawl-able, pages being indexed, and index bloat is all about – making sure that pages that deserve search engine traffic have the opportunity to get it, and making sure that pages that don’t deserve the opportunity aren’t getting traffic. That’s actionable data.
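As a rough sketch of how you might compute this metric, assume you have exported organic landing-page data from your analytics tool as CSV. The column names (`landing_page`, `source`, `visits`) and the sample rows are assumptions for illustration, not the format of any particular export.

```python
import csv
import io
from collections import defaultdict

# Hypothetical export; a real one would be read from a file.
SAMPLE_EXPORT = """landing_page,source,visits
/,google,120
/products/widget,google,8
/products/widget,bing,1
/blog/new-post,bing,0
/about,google,3
"""


def pages_with_search_visits(csv_text):
    """Map each search engine to the set of landing pages with >= 1 visit."""
    pages = defaultdict(set)
    for row in csv.DictReader(io.StringIO(csv_text)):
        if int(row["visits"]) >= 1:
            pages[row["source"]].add(row["landing_page"])
    return pages


pages = pages_with_search_visits(SAMPLE_EXPORT)
for engine, landing_pages in sorted(pages.items()):
    print(engine, len(landing_pages))
```

Tracking these counts over time, per search engine, gives you a trend line rather than a single number.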

# of pages receiving at least one visit from search engine x is different from # of pages indexed because:

- a page is not guaranteed visibility just because it is in the index, and

- there are different levels of indexation.

Again, Rand has already illustrated that last point well:

With so many levels of indexation, it’s easy to see how a URL can be technically indexed but have little to no visibility in the SERPs.

Additionally, this graphic makes it clear that indexation is too complex to take a single metric on # of pages indexed at face value; instead, you’ll want to keep an eye on multiple data points regularly.

5. Not using Webmaster Tools

However, it’s a fairly common occurrence that the # of pages you’d like to receive search engine traffic does not match the number of pages actually receiving search engine traffic.

When that happens, knowing how many pages are indexed gets you a step closer to solving that riddle.

Even if the number of pages receiving traffic is the number you want to see, looking at the number of pages indexed can help you:

- See trends in indexation before it is reflected in traffic.

- Nip index bloat or duplicate content issues in the bud.

- Diagnose issues getting new content crawled faster.

The places to look for this data are:

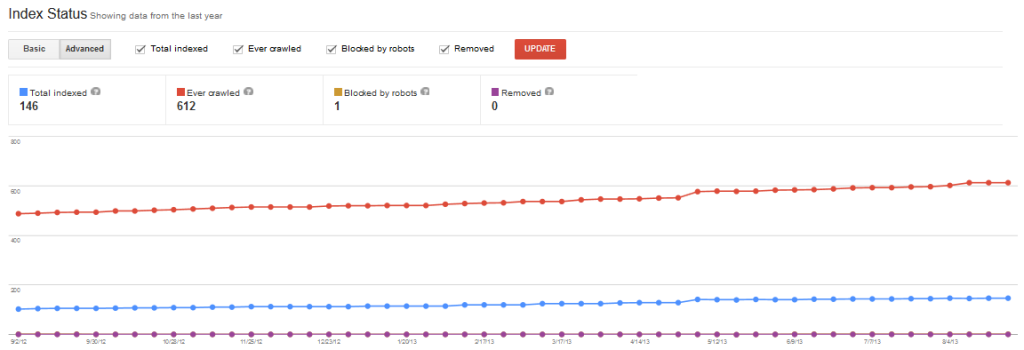

Google Webmaster Tools Index Status Report

and

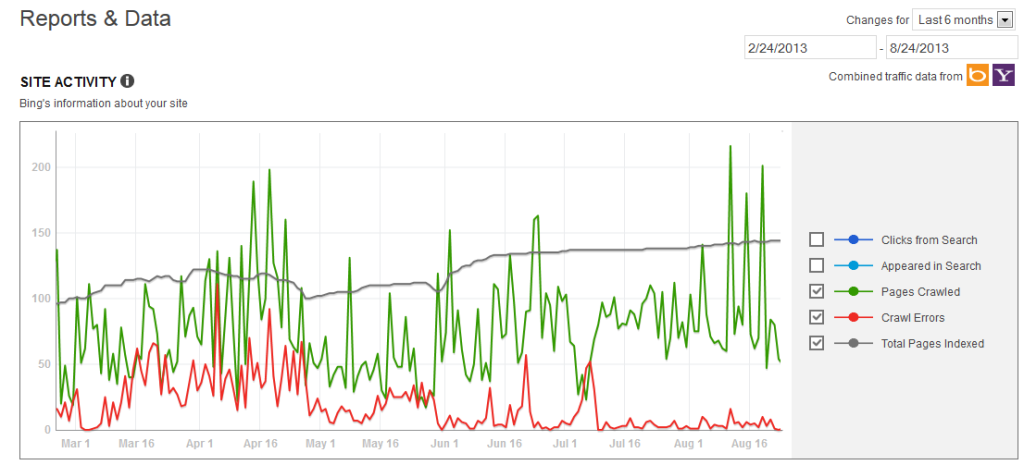

Bing Webmaster Tools Site Activity Report

I pull these reports regularly as a part of my weekly health check.

You’ll note that the GWT report tracks the number of URLs blocked from crawling by robots.txt, so this report helps you look out for the dreaded robots.txt mistakes (which do happen – just yesterday, a client’s developer moved a bunch of articles to a sub-directory that disallowed search engines from crawling it).
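If you want an extra safety net against that kind of mistake, one option is to test your most important URLs against your robots.txt rules yourself. The sketch below uses Python’s standard `urllib.robotparser`; the rules, URLs, and function name are made up for illustration, and the standard parser may not interpret every rule exactly the way a given search engine does.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content; in practice, load your site's real file.
ROBOTS_TXT = """User-agent: *
Disallow: /archive/
"""


def blocked_urls(robots_txt, urls, user_agent="Googlebot"):
    """Return the URLs that the given robots.txt rules disallow."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch(user_agent, url)]


# Hypothetical URLs: the second mimics articles moved into a blocked directory.
important = [
    "https://www.example.com/articles/seo-basics",
    "https://www.example.com/archive/articles/seo-basics",
]
blocked = blocked_urls(ROBOTS_TXT, important)
print(blocked)
```

Running a check like this against the URLs in your Sitemap after each deployment would catch an accidental disallow before the Webmaster Tools reports do.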

You’ll also note that these reports tell you the total number of pages crawled. In Google Webmaster Tools, one good red flag that unwanted pages are being crawled is when the number of pages crawled exceeds the number of pages indexed several times over.

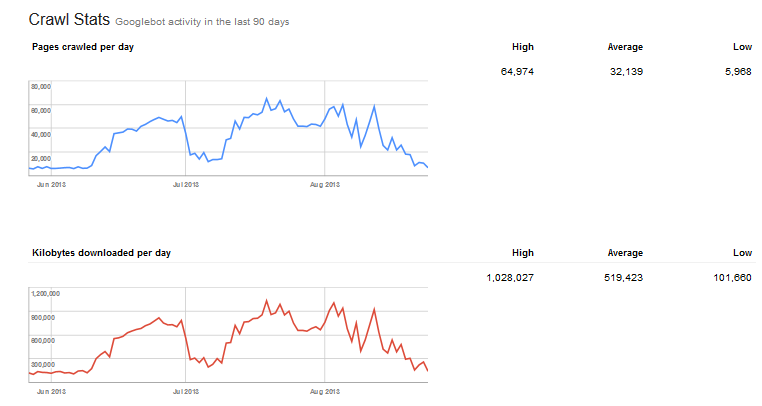

The BWT Site Activity Report and GWT Crawl Stats Report will also help you keep an eye on fluctuations in crawl levels, which could indicate problems such as an unwanted increase or decrease in the number of URLs.

Google Webmaster Tools Crawl Stats Report

When it comes to crawling and indexation, Webmaster Tools are your best friend, and not using them is the biggest mistake on this list.

6. Neglecting Bing

I’d guess that most webmasters and SEO practitioners don’t use Bing Webmaster Tools at all. I keep saying it, but Bing powers more than enough searches to make it worth your while to ensure your site is being properly crawled and indexed by Bing.

7. Not using Sitemaps as a Diagnostic Tool

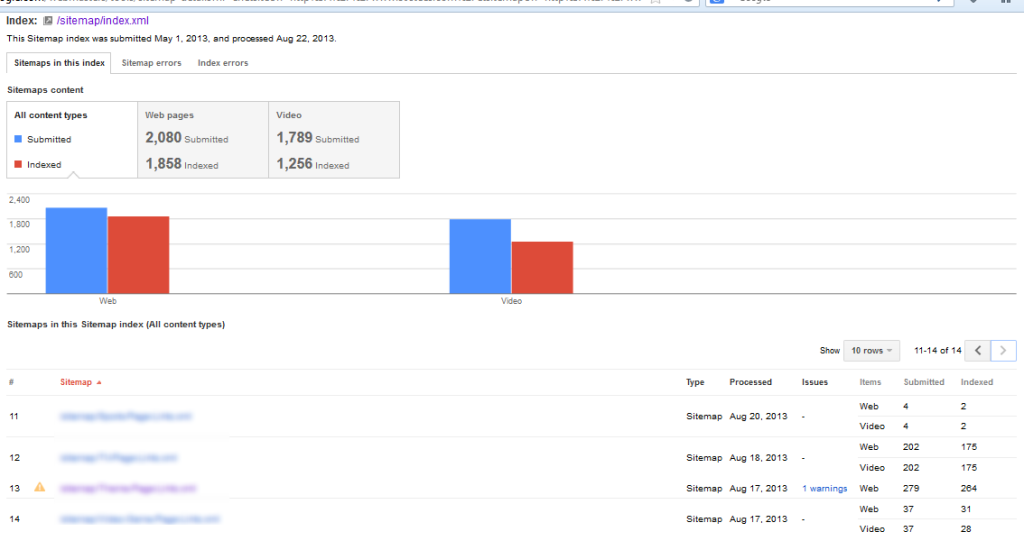

Knowing that more or fewer URLs are indexed than you’d like isn’t very actionable if you can’t pinpoint the URLs that need love. XML Sitemaps can help you do that.

Often regarded as “just something you do for SEO”, Sitemaps, when done right, can not only help you get the URLs crawled that you want crawled, but also monitor that they are getting indexed and when they are getting crawled.

The tricks are to:

- Make Sitemaps accurate and complete.

- Divide them into smaller, logically structured batches of URLs that help you see the problem areas of your site.

- Check in on which sections are getting indexed less thoroughly and less often than desired.
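The splitting step can be sketched as follows. This is one possible approach, not a prescribed one: it groups URLs by their first path segment and builds one Sitemap per group. The grouping rule, file names, and sample URLs are assumptions; your site may call for a different way of slicing.

```python
from collections import defaultdict
from urllib.parse import urlparse
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def group_by_section(urls):
    """Group URLs by first path segment; the homepage goes under 'root'."""
    sections = defaultdict(list)
    for url in urls:
        path = urlparse(url).path.strip("/")
        section = path.split("/")[0] if path else "root"
        sections[section].append(url)
    return dict(sections)


def build_sitemap(urls):
    """Return a minimal XML Sitemap (as a string) listing the given URLs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url in urls:
        ET.SubElement(ET.SubElement(urlset, "url"), "loc").text = url
    return ET.tostring(urlset, encoding="unicode")


# Hypothetical URLs for illustration.
urls = [
    "https://www.example.com/",
    "https://www.example.com/products/widget",
    "https://www.example.com/products/gadget",
    "https://www.example.com/blog/new-post",
]
sitemaps = {
    f"sitemap-{section}.xml": build_sitemap(section_urls)
    for section, section_urls in group_by_section(urls).items()
}
for name in sorted(sitemaps):
    print(name)
```

With one Sitemap per section, the submitted-versus-indexed counts reported for each file point you at the part of the site that needs attention.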

Learn more at Building the Ultimate XML Sitemap and Distilled’s Indexation Problems: Diagnosis using Google Webmaster Tools.

8. Not Monitoring 404 Errors

Page Not Found Errors, aka 404s (because they return HTTP status code 404), are when someone (or something) requests a URL, but nothing is there. 404s are a problem when:

- There are users landing on the 404 page, when what they are looking for (or an honestly suitable replacement) is somewhere else, and/or

- There is a lot of link equity flowing to the 404 page.

So don’t ignore them, like many webmasters do. This post offers further insight and a decent perspective on 404s.

9. Not Focusing on the 404s that Matter

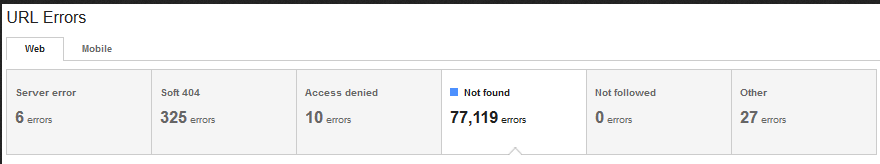

Omg!!! We have 77k 404 errors! We need to hire 11 temps stat!!!

Whoa… Chill buddy. The search engines find 404s on almost every site, and usually most of the 404s are false alarms or outdated data. That report up there is still a good one to go to for keeping an eye on 404s – but mostly for revealing trends and sudden increases.

Though some people will obsess over the Webmaster Tools’ Not Found error reports, there’s simply not enough benefit to try to fix every 404 reported by Webmaster Tools, especially since most of them simply don’t matter. (Further, I’m not a fan of how Webmaster Tools prioritizes the 404s.)

So how do we find the 404s that matter?

Glad you asked. There are three methods.

Method #1 – Google Analytics

This is my favorite method, and is all about seeing what 404s are actually being encountered by users. This benefits user-experience, which always spills over into SEO. Further, 404s receiving traffic are likely to have a good deal of link equity, so there’s additional SEO benefit in recovering link equity and funneling it where it belongs. Finally, it’s not uncommon for most of the 404 pageviews to go to just a few pages, so GA can be a great way to find the low-hanging 404 fruit.

A good overview of how to use GA to find the 404s is this article by Tim Wilson.

In addition, I have Custom Alerts set on a few client sites to tell me when unique pageviews of 404 pages go over a certain threshold. Just base the alert on pageviews of a segment of pages containing the unique title for the 404 pages, given you’re following the best practices for 404s outlined in that article.
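If you prefer to work from exported data rather than inside GA, the same two ideas (finding the few URLs behind most 404 pageviews, and flagging days over a threshold) can be sketched like this. The data shapes, sample numbers, and threshold are all hypothetical.

```python
from collections import Counter


def top_404_urls(hits, limit=3):
    """Sum pageviews per requested URL and return the biggest offenders.

    hits is an iterable of (requested_url, pageviews) pairs.
    """
    totals = Counter()
    for url, views in hits:
        totals[url] += views
    return totals.most_common(limit)


def days_over_threshold(daily_404_pageviews, threshold):
    """Return the days whose 404 pageviews exceed the threshold."""
    return [day for day, views in daily_404_pageviews.items() if views > threshold]


# Hypothetical sample data for illustration only.
hits = [
    ("/old-page", 40),
    ("/typo-url", 2),
    ("/old-page", 35),
    ("/retired-product", 10),
    ("/misc", 1),
]
daily = {"Mon": 12, "Tue": 15, "Wed": 61}

top = top_404_urls(hits, limit=2)
alerts = days_over_threshold(daily, threshold=50)
print(top)
print(alerts)
```

In this sample, a single URL accounts for most of the 404 pageviews, which is exactly the kind of low-hanging fruit worth redirecting first.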

Method #2 – External Link Analysis Tool

If you want to dig in and find 404 pages that are receiving external links, you can use a backlink tool like Ahrefs, Open Site Explorer (Moz), or Majestic SEO. Majestic SEO is free if you verify ownership of your site. Simply export a report on your site’s pages, drill down to 404s in Crawl Results and sort by AC Rank Score (estimated page authority), and you’ll have a list of notable 404s ordered by link equity.

Method #3 – Site Crawling Tool

To find all the 404 pages your site is linking to internally, do a site crawl. My favorite tool for this is Screaming Frog, but Xenu Link Sleuth is free. I also really like to use Screaming Frog to crawl the list of 404s I pull from the tools to verify which are still 404.
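If you don’t have a crawling tool handy, the verification step can be approximated with a short script. This is only a sketch using Python’s standard library, not a replacement for a real crawler: it sends a HEAD request per URL, follows redirects (so a URL that now redirects to a live page reports the final status), and does not handle network failures such as DNS errors. The function names and URLs are hypothetical, and the demo uses canned status codes instead of real requests.

```python
import urllib.error
import urllib.request


def http_status(url, timeout=10):
    """Return the HTTP status code for a HEAD request to url."""
    request = urllib.request.Request(url, method="HEAD")
    try:
        with urllib.request.urlopen(request, timeout=timeout) as response:
            return response.status
    except urllib.error.HTTPError as error:
        # 4xx and 5xx responses are raised as exceptions; keep the code.
        return error.code


def still_404(urls, fetch_status=http_status):
    """Return the URLs whose current status is still 404."""
    return [url for url in urls if fetch_status(url) == 404]


# Demo with canned statuses so the example runs without network access.
# Omit fetch_status to check real URLs with http_status.
canned = {
    "https://www.example.com/old-page": 404,
    "https://www.example.com/fixed-page": 200,
}
remaining = still_404(canned, fetch_status=canned.get)
print(remaining)
```

Passing the status lookup in as a parameter keeps the filtering logic easy to test and lets you swap in a politer fetcher (with delays or retries) for large lists.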

During an initial SEO audit, I’ll use all three methods to identify and prioritize 404s. I also check on 404s with each method every month during my monthly deep dives (which have me utilize each tool anyway), and I glance at Webmaster Tools crawl error data during my weekly checks. Combine this with custom alerts, and you’ll have a total handle on 404s that matter, without spending an eternity on them.

10. Neglecting other URL Crawl Errors

404s get a lot of attention, but there are a number of other 3-digit numbers that can be a pain in your butt.

- 403s, 500s, and 503s are all non-crawlable.

- Other “not followed” URLs like redirect loops may not be crawlable.

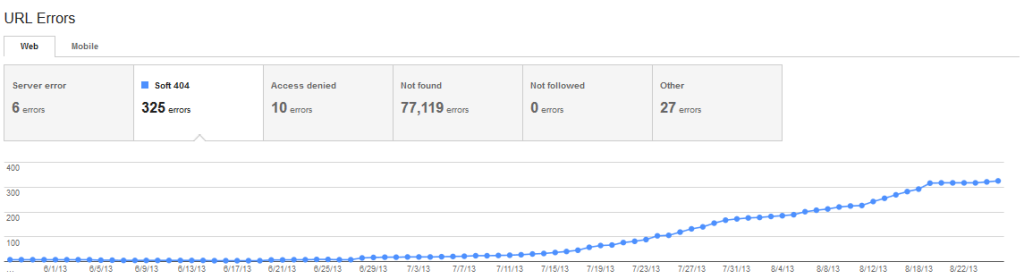

- Soft 404s (shown in GWT only) may be a user-experience and SEO issue.

- 302s (shown in BWT) are temporary redirects and do not pass link equity.

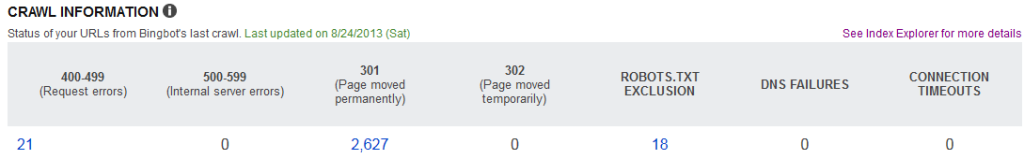

Don’t make the common mistake of neglecting these URL issues, because it’s plenty easy to keep an eye on them. You can use the Crawl Error reports in both Google and Bing Webmaster Tools, and the paid SEO tools also report on crawl errors. I take a snapshot of these reports monthly, and then every week I just quickly compare the weekly numbers to the monthly to see if there are any changes that raise a red flag.

Google Webmaster Tools Crawl Errors

Bing Webmaster Tools crawl errors
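The weekly-versus-monthly comparison described above can be as simple as the sketch below. It assumes you record the error counts by hand (or from an export) as plain dictionaries; the 25% tolerance, the error labels, and the numbers are arbitrary placeholders, not recommendations.

```python
def flag_changes(baseline, current, tolerance=0.25):
    """Return {error_type: (before, after)} for counts that moved noticeably.

    A count is flagged when it changes by more than `tolerance` relative to
    the baseline, or when an error type appears that the baseline lacked.
    """
    flagged = {}
    for error_type in sorted(set(baseline) | set(current)):
        before = baseline.get(error_type, 0)
        after = current.get(error_type, 0)
        if before == 0:
            changed = after > 0
        else:
            changed = abs(after - before) / before > tolerance
        if changed:
            flagged[error_type] = (before, after)
    return flagged


# Hypothetical snapshots for illustration only.
monthly = {"404": 1200, "soft 404": 40, "500": 3, "302": 15}
weekly = {"404": 1250, "soft 404": 41, "500": 30, "302": 15, "403": 6}

red_flags = flag_changes(monthly, weekly)
print(red_flags)
```

Here the small drift in 404s is ignored, while the jump in 500s and the newly appearing 403s are surfaced for a closer look.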

TL;DR

- It’s critical to make some effort to monitor indexation.

- Checking in on the crawl every once in a while is recommended too.

- Monitor indexation with the metric # of pages receiving at least one visit from Bing and Google, as shown in Google Analytics.

- Also monitor indexation with the Pages Indexed metric in Google Webmaster Tools AND Bing Webmaster Tools.

- Time spent on a proper Sitemap, logically sliced into tasty bite-sized pieces, is well-spent if you’re having indexation/crawling issues.

- Keep an eye on 404s, especially in Google Analytics.

- Keep an eye on the other URL crawl errors using GWT and BWT.

- If you do it right, a little bit goes a long way when monitoring indexation and crawl metrics, and you’ll be able to keep on top of things with just a few minutes a week.

Toodles.