Query Time Data Import In Google Analytics For Historical Reporting

One of the most important things that you can do to enhance your reporting capabilities in Google Analytics is to add custom information to your reports, and query time Data Import is a powerful tool to help you accomplish this. Google Analytics by itself generates a massive amount of information about your website, the users that visit, how they got there, and what content they viewed. However, there’s only so much that Google Analytics can reliably determine with the simple out-of-the-box implementation. Luckily, there are places where we can store custom information, and a few different methods for getting the data we want into Google Analytics.

What is Data Import?

The Data Import feature in Google Analytics allows you to upload data from other sources and combine it with your Google Analytics data. It’s just one way to get custom information into GA. Other options can include sending information with the hit on the page, or using Measurement Protocol hits, but those have their own technical challenges, pros, and cons.

When you have a lot of information you want to get into GA, Data Import is the way to go. We’ve written about the Data Import feature in the past; check out these posts on how to set it up and how to report on the data you’ve imported.

Published: September 28, 2015

Published: January 13, 2015

There are several types of data you can upload, and the uploading process can be done manually in the GA interface or using the API.

Data Import Behavior

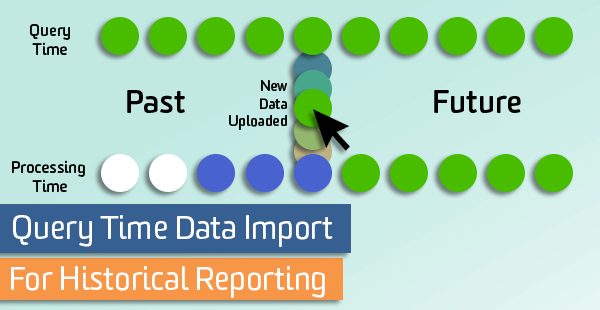

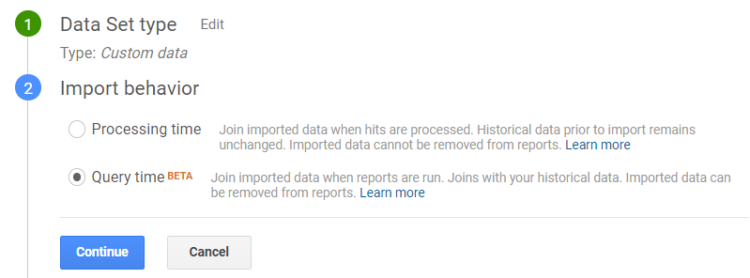

You can choose from two different modes of Data Import: processing time and query time. Processing time mode is available for standard and 360 users, while query time mode is available for Google Analytics 360 only.

Processing time data import works by joining data going forward. As hits come in from your website, GA will check them against your uploaded data, connect any extra data that it finds, and then save the result in your reports. Uploaded data is permanently married to your GA data, and it will only be added to hits that are collected after the data import was created. If the data is uploaded incorrectly, you cannot fix it or delete it.

Query time data import works differently. This method refreshes the joined data every time you request a report (a “query”), dynamically connecting your GA data with your uploaded data. This means that you’ll see whatever data was most recently uploaded, even if you select a report and date range from before you uploaded the data. This is AMAZING.
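To make the difference concrete, here is a minimal sketch in Python. It is a toy model of the two join modes, not how Google Analytics is implemented; the `ToyAnalytics` class, the page path, and the author value are all made up for illustration.

```python
from dataclasses import dataclass, field

NOT_SET = "(not set)"  # placeholder used here for a missing value

@dataclass
class ToyAnalytics:
    """Toy model of the two join modes (hypothetical, for illustration only)."""
    lookup: dict = field(default_factory=dict)  # uploaded data: key -> value
    hits: list = field(default_factory=list)    # stored hits

    def upload(self, rows):
        # Add or overwrite uploaded key/value pairs.
        self.lookup.update(rows)

    def collect(self, page):
        # Processing time: the join happens once, when the hit is stored.
        joined = self.lookup.get(page, NOT_SET)
        self.hits.append({"page": page, "author_at_processing": joined})

    def report_processing_time(self):
        # Returns whatever was joined when each hit was collected.
        return [(h["page"], h["author_at_processing"]) for h in self.hits]

    def report_query_time(self):
        # Query time: the join happens now, against the latest upload.
        return [(h["page"], self.lookup.get(h["page"], NOT_SET)) for h in self.hits]

ga = ToyAnalytics()
ga.collect("/blog/post-1")                  # hit arrives before any upload
ga.upload({"/blog/post-1": "Author A"})
ga.collect("/blog/post-1")                  # hit arrives after the upload

processing = ga.report_processing_time()
query = ga.report_query_time()
```

In this sketch, the processing time report only shows the author on the second hit, while the query time report shows it on both, because the lookup is applied when the report is requested.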

What are the Benefits of Query Time Data Import?

For 360 users (or anyone considering 360) query time import has some advantages over processing time mode:

- You can join data retroactively, which allows you to use almost all of the features and reports in the GA interface for historical analysis.

- You can change, fix or delete the uploaded data; it’s only joined with GA data when requested by a report.

Another benefit to using query time import is that you can use the imported data in Data Studio to visualize and measure historic performance. Although Data Studio doesn’t support data joining yet, you can use query time import to join the data within Google Analytics, then visualize in Data Studio.

Data Import Examples

Query time data import works really well when you want to show the current value of something in Google Analytics, and you’re not concerned with keeping historic records in Google Analytics.

Ecommerce

For sites with a huge inventory of products, you could use query time import to upload additional product-specific details. Since you’ll be able to retroactively join these details with products, this is a great option for non-changing values, like color, size, flavor, ISBN, weight, etc., or any other values that would be consistent for a product over time. You probably would not want to use query time import for changing values like price, since a product price can change over time due to discounts or inflation.
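As a rough sketch of what preparing such an upload could look like, the Python below builds CSV text keyed on product SKU. The header names follow the `ga:` naming style that Data Import schemas use, but the specific custom dimension indexes (`ga:dimension1`, `ga:dimension2`), the product records, and the `build_product_upload` helper are assumptions for illustration; your own data set schema determines the real header.

```python
import csv
import io

def build_product_upload(products, columns):
    """Return CSV text with a header row and one row per product.

    `columns` maps each upload header to the key holding that value
    in the product dicts (a hypothetical structure for this sketch).
    """
    buf = io.StringIO()
    writer = csv.writer(buf, lineterminator="\n")
    writer.writerow(columns.keys())
    for product in products:
        writer.writerow(product[key] for key in columns.values())
    return buf.getvalue()

# Assumed schema: SKU as the key, two custom dimensions for stable attributes.
columns = {"ga:productSku": "sku", "ga:dimension1": "color", "ga:dimension2": "size"}
products = [
    {"sku": "SKU-001", "color": "red", "size": "M"},
    {"sku": "SKU-002", "color": "blue", "size": "L"},
]
csv_text = build_product_upload(products, columns)
```

Only stable attributes are included here; a changing value like price is left out on purpose, for the reason described above.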

Publishers

If you have a large publisher site where content performance metrics are a high priority, you can bring in additional page-level details for analysis, such as category, author, tags, publish date, etc. While you could use processing time mode to upload additional content details later in the day (for example, at midnight, or even automatically every hour), you’d only be able to measure engagement with that article going forward – either the hour after the article is published or the day after.

However, since publishers often see the highest engagement within minutes of publishing a new article or post, you’d potentially be missing data for the peak engagement times with new content. Because query time mode links data historically, it might be a better option to measure total engagement with content from the time that it was published.

Sales

Query time data import can be extremely valuable to sales teams, as well. If you have a CRM like Salesforce, you can upload additional data into Google Analytics to better understand the process that users took to become a qualified lead or sales prospect.

For example, you could upload details like company name, salesperson, industry, etc. into Google Analytics, using the Client ID as the key. You’d need to send this value to both places: saved in Google Analytics as a custom dimension and passed into Salesforce, as Dan documents in his post.

Published: June 15, 2016

Because we’re using query time, we know that data will be joined historically; we can see everything that each company (or lead) did on our website in the past: all of the pages they viewed, events they triggered, and conversions. You’ll need to take care that you’re not passing in Personally Identifiable Information; we don’t want to upload names, email addresses, phone numbers, etc.
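One simple way to guard against this is an allow-list: only fields you have explicitly approved ever reach the upload file. The sketch below assumes a CRM export arrives as Python dictionaries; the field names and the `strip_to_allowed` helper are hypothetical, not part of any Salesforce or Google Analytics API.

```python
# Fields approved for upload (assumed names for this sketch).
ALLOWED_FIELDS = ("client_id", "company", "salesperson", "industry")

def strip_to_allowed(record, allowed=ALLOWED_FIELDS):
    """Keep only allow-listed fields, in a fixed order; drop everything else."""
    return {name: record[name] for name in allowed if name in record}

crm_record = {
    "client_id": "1234567890.1234567890",
    "company": "Example Corp",
    "industry": "Retail",
    "salesperson": "Rep 7",
    "contact_email": "someone@example.com",  # must never be uploaded
    "contact_phone": "555-0100",             # must never be uploaded
}
safe_record = strip_to_allowed(crm_record)
```

An allow-list is used rather than a block-list so that a new field added to the CRM export later is excluded by default.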

By filtering the lead data by salesperson, you can create a Custom Report that gets automatically emailed to each individual on the sales team. This can be a great reminder for your sales team to follow up with leads who come back to your site after their original visit, especially if you have a longer sales cycle.

SEO and Social

For SEO or social metrics such as shares, likes, or number of links, you could import data using the page URL as the key. To get this social or SEO data, you’d need to harness the power of a tool that could fetch page-level information from social or SEO platforms.

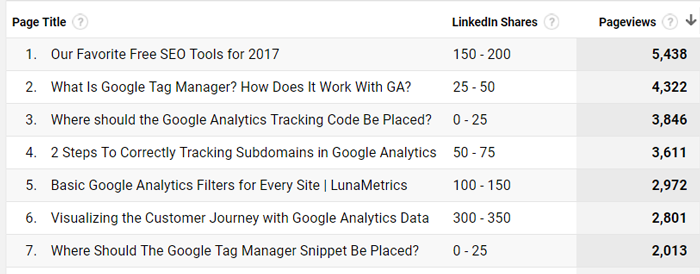

To give you an example, we’ve connected Supermetrics and its Google Drive add-on option to report on content engagement from social sources like LinkedIn. Because we want to automate uploading this social data into Google Analytics, we’re also using a Google Apps script for the data import process (ask a developer to help with this part).

In this example, we can schedule Supermetrics to refresh the total number of LinkedIn shares for each blog post in our Google Sheet; then, using Data Import, we upload that data to Google Analytics every night. Similar to the use case for sales teams, we can schedule automated Custom Reports for each author so they can get the most up-to-date insight into how their content is performing across social media.

Social shares are a perfect example of a field in Google Analytics where we’d prefer to see the most recent data, that is, the current number of shares. Note, however, that we’re uploading these as Custom Dimensions, which means that they won’t be treated as numbers in GA. Instead of uploading the exact numbers, which may change by just a few shares each day, we bucketed our results to get a general idea of which blog posts are getting shared most.
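The bucketing step might look something like the following Python sketch. The bucket boundaries and labels are assumptions chosen for illustration, not the ones we used; pick ranges that match how your own content is shared.

```python
import bisect

# Inclusive upper bounds for each bucket, and the label for each range.
# These boundaries are hypothetical.
UPPER_BOUNDS = [0, 10, 50, 100]
LABELS = ["0", "1-10", "11-50", "51-100", "101+"]

def bucket_shares(count):
    """Map a share count to a text label suitable for a custom dimension."""
    if count < 0:
        raise ValueError("share count cannot be negative")
    # bisect_left finds the first bound that is >= count.
    return LABELS[bisect.bisect_left(UPPER_BOUNDS, count)]

share_counts = {"/blog/post-1": 0, "/blog/post-2": 7, "/blog/post-3": 50, "/blog/post-4": 240}
bucketed = {page: bucket_shares(n) for page, n in share_counts.items()}
```

Because the label only changes when a post crosses a boundary, a post going from 7 to 9 shares keeps the same dimension value from one nightly upload to the next.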

Ready to Use Data Import?

There are a few limitations to know about: query time is currently in Beta and cannot be used with Cohort reporting, Multi-Channel Funnels, Realtime reporting, or AdWords cost data.

Before you jump into using Data Import (query time or processing), know that it comes with a few considerations! You’ll need to check and double check things like data formatting/schema and upload file size limits. Getting past the technical limitations can take a few rounds, but once you get to a final working upload, the process is relatively quick, especially if you’ve automated it.
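A quick pre-upload check can catch many of these problems before Google Analytics rejects the file. The Python sketch below is one possible approach, with assumptions: `check_upload` is a hypothetical helper, the header is an example schema, and the size limit is a parameter you supply after looking up the current limit in the Data Import documentation.

```python
import csv
import io

def check_upload(csv_text, expected_header, max_bytes):
    """Return a list of problems found in the CSV text (empty if none).

    Line numbers assume no quoted field spans multiple lines.
    """
    problems = []
    size = len(csv_text.encode("utf-8"))
    if size > max_bytes:
        problems.append(f"file is {size} bytes, over the {max_bytes}-byte limit")
    rows = list(csv.reader(io.StringIO(csv_text)))
    if not rows or rows[0] != list(expected_header):
        problems.append("header does not match the data set schema")
        return problems
    seen_keys = set()
    for line_no, row in enumerate(rows[1:], start=2):
        if len(row) != len(expected_header):
            problems.append(
                f"line {line_no}: expected {len(expected_header)} columns, got {len(row)}"
            )
        elif row[0] in seen_keys:
            # The first column is treated as the join key in this sketch.
            problems.append(f"line {line_no}: duplicate key {row[0]!r}")
        else:
            seen_keys.add(row[0])
    return problems

header = ["ga:pagePath", "ga:dimension3"]  # assumed schema
good = "ga:pagePath,ga:dimension3\n/a,1-10\n/b,0\n"
bad = "ga:pagePath,ga:dimension3\n/a,1-10\n/a,0\n/b\n"
good_problems = check_upload(good, header, max_bytes=1_000_000)
bad_problems = check_upload(bad, header, max_bytes=1_000_000)
```

Running a check like this as the first step of an automated nightly upload means a malformed export fails loudly on your side instead of silently producing a rejected upload.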