Automating Google BigQuery Jobs with Google Analytics

Google BigQuery’s seamless integration with Google Analytics 360 (GA360) and other tools gives users the opportunity to automate repetitive work. Below, we’ll discuss common BigQuery automation use cases and actually walk you through the automation process.

Google BigQuery (BQ) is a "serverless," cloud database and data warehousing solution that’s included as part of the Google Cloud Platform (GCP). BQ gives users the power to store, integrate, and analyze data utilizing its web interface or built-in APIs. If you’re familiar with standard SQL syntax, the learning curve should be relatively low, but it can help to see example use cases and reasons why you may need to use this powerful data warehouse.

Skip to:

The Why: BigQuery Automation Use Cases

BigQuery is undoubtedly one of the most exciting tools of the Google Cloud Platform, especially when it comes to Google Analytics. The Google Analytics 360 reporting interface has limitations, such as the inability to access hit-level data at the user level, and the GA360 native connection to BigQuery empowers users to harness data in ways not otherwise feasible.

The majority of use cases for the Google Marketing Platform (GMP) and other marketing tools can be grouped into 4 categories:

- Reporting: Connect stored data to dashboarding tools (e.g. Tableau, Google Data Studio, Google Sheets, Excel)

- Data Preparation & Manipulation: Clean and transform data into usable formats for reporting and analysis

- Integration: Connect outside data sources that can’t be imported directly into GA (e.g. offline marketing data, personally identifiable information or PII)

- Data Science: This is the pot of gold at the end of the rainbow! Access individual, hit-level data that isn’t available within the GA interface. Use BQ or BigQueryML for complex analysis capabilities, such as audience segmentation or attribution and customer journey modeling.

Set It and Forget It

Reporting, data preparation and manipulation, integration, and data science projects often require repetitive BigQuery tasks, such as running queries or importing data.

Sure, running queries and importing data manually is an option, but why do that? The glory is that users can set up an automated BigQuery job and not have to worry about wasting time with mind-numbing, repetitive tasks.

BQ Automation Use Cases

Reporting

First, you can utilize automated BigQuery jobs to populate dashboards.

Enhanced Cohort Analysis

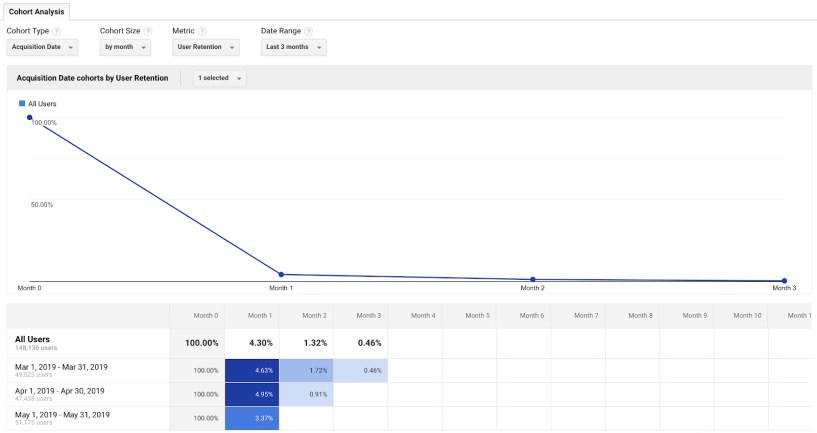

All Google Analytics customers have access to a report called “Cohort Analysis,” currently in beta. This report helps you understand how well users are retained given their acquisition date over a period of time. However, the Cohort Analysis report is very limited as it stands today. For instance, this report only shows up to the past three months of data.

Screenshot from Google Merchandise Store Demo Account (Audience > Cohort Analysis)

We extended the capabilities of the Cohort Analysis report by replicating it in Data Studio using automated BigQuery jobs.

Data Studio is clunky when using it with large datasets, so we utilized BigQuery jobs to pre-process the GA data, store the data in staging tables, and automatically update the report every month as new data becomes available.

Our team greatly expanded the report’s capabilities by adding in cohort group variables and extending the lookback windows:

- Analyze cohorts by Acquisition Date, Channel, Device Type, User Location, User Type

- Extend the lookback window from 3 months to 12 months

Example of Cohort Analysis report in Data Studio backed by BigQuery

Thanks to our work using BigQuery automation, our clients were able to use this report to make business decisions, such as:

- Prioritizing budget for high- and low-performing acquisition channels

- Identifying areas of strength and weakness in retention by user characteristics

Data Preparation & Manipulation

Automated BQ jobs can also come in handy for data pre-processing and transformation.

Pre-Processing Data

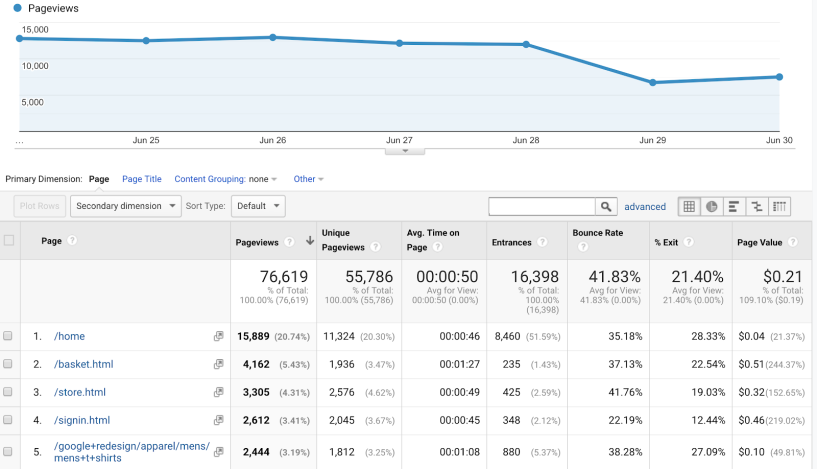

One of the most popular GA reports is the “All Pages” report. All Pages shows important page-level metrics, such as Pageviews, Unique Pageviews, Avg. Time on Page, Entrances, Bounce Rates, Percent Exits, and Page Values.

Screenshot from Demo Account (Behavior > Site Content > All Pages)

Unlike in the GA interface, when data gets exported from Google Analytics to BigQuery, none of the data is aggregated. This is great for complex analyses, but it can be difficult to replace interface report metrics, like All Pages, for carrying out analyses with BigQuery. You can then use the same metrics to create dashboards with much more flexibility.

We put together a guide for calculating GA metrics within BigQuery. Once all of the metrics are calculated, you can automate the query to update daily as new data gets exported from GA to BQ.

Our clients have used pre-processing solutions like this for eliminating the repetitive nature of calculating these metrics each time they’re needed for reporting.

Cleaning Data

Another great example of BigQuery job automation is cleaning your data. Listen, we get it, it’s easy to accidentally apply a filter with the wrong configurations to your GA Reporting View.

Well, what now? All of my Page Titles have extra characters at the end because of a failed Regular Expression. My data won’t be able to merge in my reports, and I will have to manually group the rows together.

We see this all of the time with clients. Thankfully, there is a solution.

We can write a query to eliminate those extra characters and then schedule a BigQuery job to run automatically as more Page Title data becomes available. Now bask in the ability to fuel your report with the clean data. No more manual updates!

Integration

Data has much more potential when it’s not in silos. BigQuery allows you to combine Google Analytics data with other sources that can’t be imported into the GA interface and vice versa. Automating BigQuery jobs allows this process to happen seamlessly.

Connect Offline and Online Data

For example, many retail companies have brick & mortar stores, as well as an online presence and many universities, want to understand the impact of campus recruiting events and online applications. We can connect this traditionally-siloed data automatically within BigQuery by setting up a process to import offline data into the database. This is a highly customized process, dependent on your organization’s set up and resources, and Google Cloud Platform provides a number of tools including Cloud Data Fusion and Cloud Composer for robust processes, BigQuery Data Transfers from a variety of external sources, and other more lightweight solutions for moving data in or out of BigQuery.

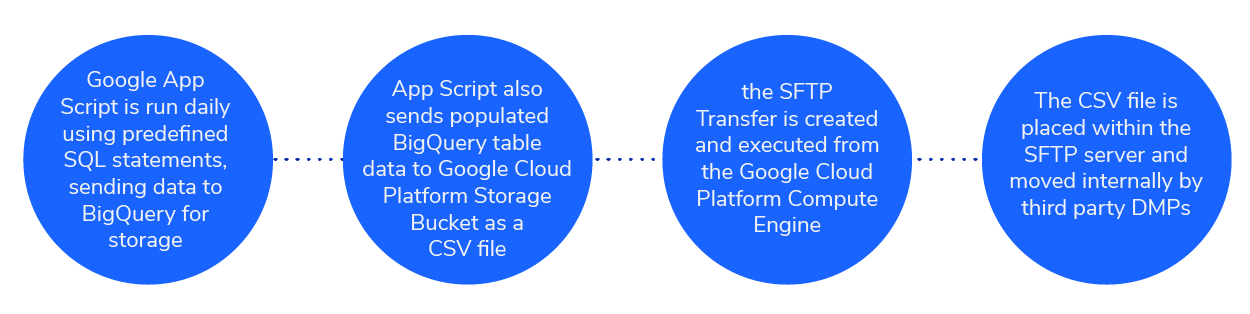

One example of a lightweight solution is to move data from BigQuery to a Google Cloud Storage Bucket using a Google Apps Script or Cloud Functions. You can also set up processes to copy this data from a Compute Engine instance to a third-party SFTP server, for example:

Example flow of BigQuery data to GCP Storage Bucket

Data Science

And last, but not least, BigQuery job automation empowers data scientists to update models as new data becomes available.

User Cluster Assignment Pipeline

Marketers typically target users based on their associated clusters. After user clusters are computed, how are new users added to these groups?

We can use scheduled BigQuery jobs to create a pipeline for assigning users to clusters based on their behavior.

Clients can then continue to engage their users, even after the preliminary analysis is completed. Here are some examples where we could automate user assignment:

- Leading Hotels of the World (LHW): identifying and targeting users based on hotel amenities interests

- Teach for America (TFA): identifying and targeting qualified applicants

- PBS: optimizing site features based on audience segments

We’re digging into other BigQuery capabilities with GCP integrations, including work with BigQueryML and AI Platform Notebook.

The How: Steps to Automate BigQuery Jobs

Configuring automated BigQuery jobs is a relatively simple process. Follow along with the steps below to eliminate some of your most repetitive BQ tasks.

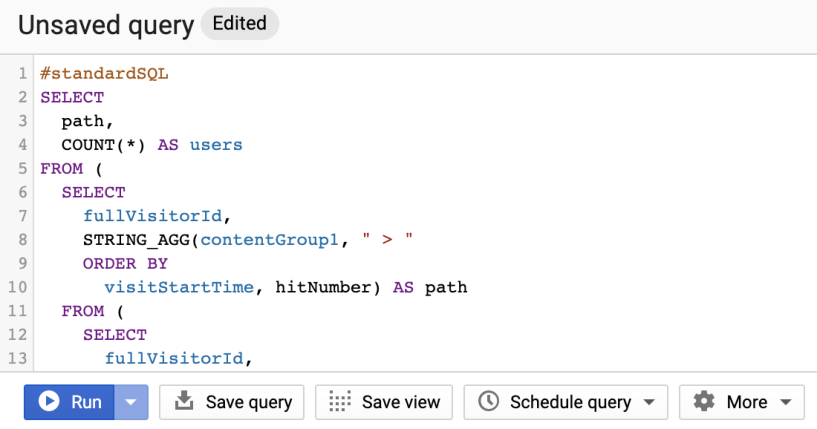

Step 1: Write Query in Editor

Your first step is to write your query in the Query Editor. You will not be able to access the “Schedule query” options without a valid query in the Editor.

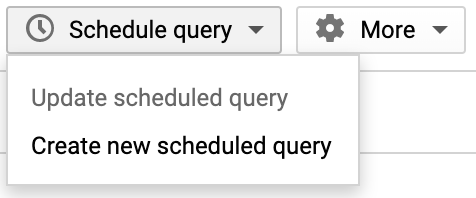

Step 2: Click Schedule Query Option

Click on the “Schedule query” dropdown, and select the “Create new scheduled query” option.

Step 3: Configure Scheduled Query

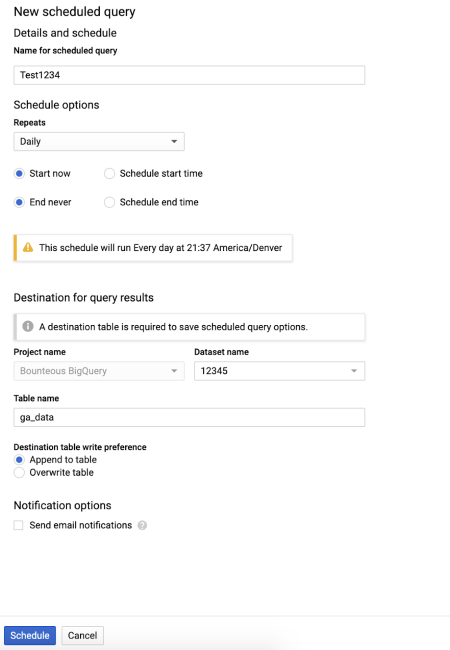

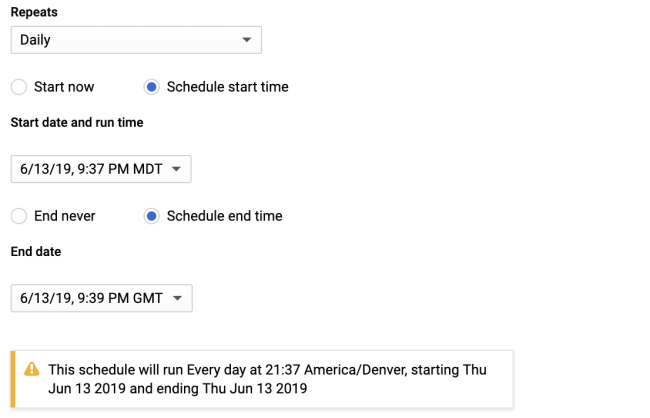

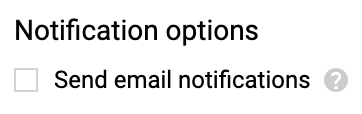

After clicking on the “Create new schedule query option,” a form should pop up called “New scheduled query.” This is where we’ll configure our scheduled query.

When filling out the form, make sure to edit at least the following required fields:

- Name for scheduled query

- Table name

Configuration Settings

Repeat Frequency

Queries can be scheduled to repeat Daily, Weekly, and Monthly. They can also be set to repeat on a custom schedule, where you can easily control dates/times, and there is an On-Demand option to programmatically trigger the query.

Append or Overwrite Data

The “Destination table write preference” is an important configuration. You can either set the query to append to a table or overwrite the existing table.

When deciding which option to choose, think about the scheduled query use case and ask yourself if you need the historical data in the table or if you only want the latest data.

Notifications

Email notifications are great for confirmation that your scheduled query ran successfully. If there is an error, you will be able to act quickly. We highly recommend checking this box and making sure a person is assigned to follow up on any issues right away.

And that’s it! You’ve got yourself a scheduled query that you can leverage to solve your reporting, data preparation and manipulation, integration, and data science challenges.

Step 4: Schedule Query

Once all of the settings are configured, hit the blue “Schedule” button in the bottom left-hand corner. Congratulations! Your query is scheduled.

What’s Next?

The use cases for Google BigQuery are endless. All Google Analytics 360 customers should be exploring the ways that BigQuery and automation can help save them time and add to their bottom line. The BigQuery resource section is pretty in-depth, with lots of great technical items. Our own collection of articles about BigQuery can be helpful for BigQuery business cases especially when combined with Google Analytics. If you're more of a hands-on learner and need to know the how in addition to the what, check out our public BigQuery trainings to get hands-on practice and the opportunity to get answers to your biggest BQ challenges.