How To Use Google Webmaster Tools For SEO

Google Webmaster Tools is a sweet suite of Google SEO tools that provides data and configuration control for your site in Google. If you’re doing any SEO and you don’t find value in GWT, you either use a paid tool that re-uses GWT data or you have an untapped gold-mine.

There’s a ton you can do with GWT, but it can take a while to learn how to get great return on the time you spend with it. To that end, I’ve tried my best to assemble a meaty, practical collection of actionable tips on the reports I’ve found most useful.

General Tips

Getting Started

Verify Every Site Version

Site Messages

Use the Help Files

Search Queries *old report (pre 5-6-15)

Limitations

Cool Insights

Hacks

Change of Address

Structured Data

Data Highlighter

HTML Improvements

Sitelinks

Links to Your Site

Mobile Usability

Index Status

Crawl Errors

Crawl Stats

Fetch as Google

Sitemaps

URL Parameters

Getting Started

If you haven’t set up Google Webmaster Tools yet, do so yesterday. It’s really easy and worthwhile. Just go to www.google.com/webmasters/tools, sign in with your Google account, and click add a site. Then you’ll be provided with several options to verify that you manage the site. Use the option that’s easiest and make it happen.

Add Every Site Version

The biggest mistake that I see people make with GWT is failing to add every version of every site they manage. It’s unfortunate, because it’s very easy to do. Failure to add every version of every site will result in data for only some of your site(s) ― at best, this stifles insights; at worst, this can cause you to make costly errors or neglect critical issues.

Obviously, if you have the domain thingamabobs.com and a domain called whatchamacallits.com, you should add both root domains.

You should also add all subdomains. If you have the subdomain http://red.thingamabobs.com and the subdomain http://www.thingamabobs.com, add them both. If you only add http://www.thingamabobs.com, that’s all GWT will track; and that’s all the data you’ll get.

If you have http://thingamabobs.com and http://www.thingamabobs.com, add them both. (Then fix your duplicate content issue).

If you have https://www.thingamabobs.com and http://www.thingamabobs.com, add them both.

Basically, if you can change what’s to the left of your root domain and still get a live page when you enter the URL in the browser bar, then add that subdomain. Also add any subdirectories that target specific countries. Google explains the versions you can add here.
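If you want a quick checklist of versions to try in the browser bar, here is a minimal Python sketch that enumerates the protocol and subdomain combinations for a root domain. The function name `site_versions` and the default subdomain list are my own assumptions for illustration; swap in whatever subdomains your site actually uses.

```python
from itertools import product

def site_versions(root_domain, subdomains=("", "www"), schemes=("http", "https")):
    """Return every scheme/subdomain combination worth checking for a root domain."""
    versions = []
    for scheme, sub in product(schemes, subdomains):
        # An empty subdomain means the bare root domain (no "www.").
        host = f"{sub}.{root_domain}" if sub else root_domain
        versions.append(f"{scheme}://{host}/")
    return versions

# Hypothetical example using the domains from this guide.
for url in site_versions("thingamabobs.com", subdomains=("", "www", "red")):
    print(url)
```

Any URL in that list that returns a live page is a candidate to add to GWT.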

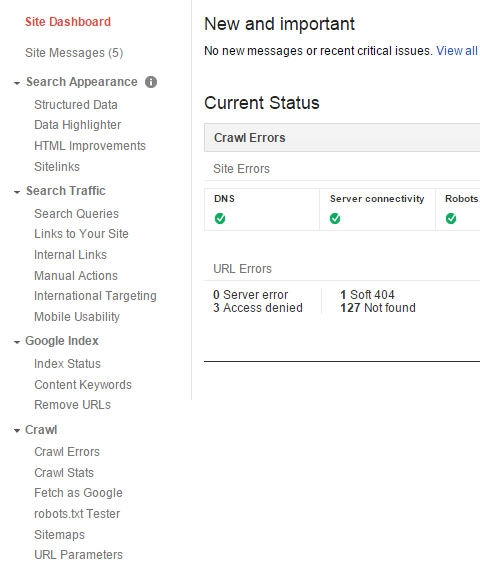

Site Messages

Occasionally, Google Webmaster Tools will notify you if your site seems to have a very important issue. Make sure you set up the messaging forwarding so you can get these notifications emailed. The emails may inform you about some problems accessing the site, increases in crawl errors, unnatural link warnings, malware alerts, and more.

One caveat is that there can be a delay between the time the problem arises and the time you get notified. Another caveat is that there are plenty of bad problems GWT won’t notify you about. You definitely want to pay attention to GWT emails, but they are merely an additional line of defense, not a replacement for any other risk-mitigation measures.

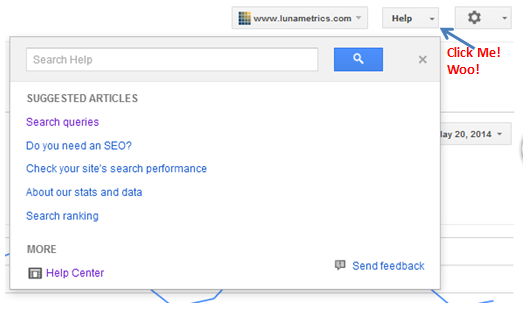

Use the Help Files

Google has a lot of resources on SEO, and good practitioners gobble as much of it as they can. The Google Webmaster Tools Help Center is a treasure trove.

Make sure you use GWT with an inquisitive mindset. Most of the reports have limits, caveats, and nuances. Before you go rushing off to make a major decision, make sure the data is what you think it is and means what you think it means. Often, GWT data leads to important but unconfirmed hypotheses that you need to investigate.

Also, if you click the help button, you may get a quality, relevant suggested help article. So do that frequently. The articles are pretty good at explaining what the various data-types in reports actually are (insomuch as Google is willing to share).

However, the articles are frequently dry and lacking in pragmatic insights on what to focus on. Plus, there are very few pictures. (We’re expected to just read words on the interwebs? Come on.)

The GWT reports help files can also be a bit inconsistent. I looked through the related help files for each GWT report I cover below, and I’ve listed the most helpful ones.

Be sure to click on those little question marks a bunch too.

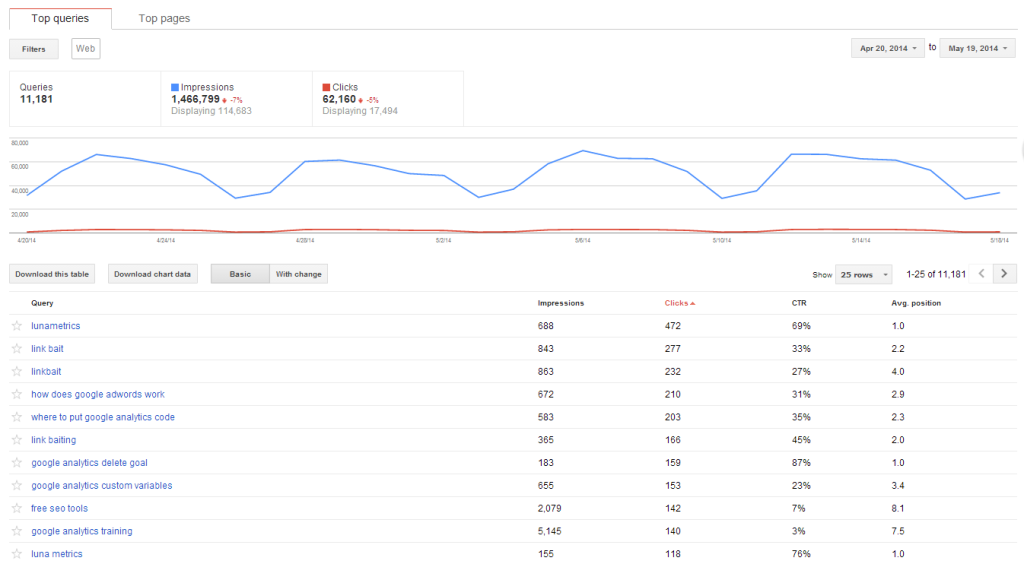

Search Queries Reports

Author’s note: On 5/6/15, Google officially rolled out a vastly improved version of this report called the Search Analytics Report (in beta). A detailed help article on the new report is here. Please note that this section of this guide pertains to the old report and is out of date at the moment.

GWT help article here (for old “Search Queries” report)

This is the gem of the “Search Traffic Section” ― heck, it’s the gem of all of Google Webmaster Tools, and the data in this report can be found repackaged in many paid SEO tools suites. This report has some (nothing is truly comprehensive) data on the following:

- impressions

- clicks

- click-thru-rate

- rankings

That data can be displayed against the following dimensions: 1) keyword, 2) landing page, and 3) keyword/landing page.

You can then filter data by location (but only certain countries) and Google search vertical (regular web, image, mobile, video, or news). And you can download that data and have a field day.

Sweet, right? Well, naturally there are some…

Limitations and Caveats of the Search Queries Report

This data has gotten extremely popular as folks must compensate for missing data on keyword performance due to keywords (not provided) in Google Analytics. The search query data seems to have gotten much more reliable too. And more specific.

Unfortunately, while Search Queries are one of the most important ways to fill in the (not provided) void, Search Queries data is far from a complete replacement for the (not provided) keywords. Why?

- There’s no engagement or conversion metrics.

- You don’t have all rich secondary dimensions like “metro area” or “time of day” like in Analytics.

- Not every keyword is shown (not even close).

- A click in this report is technically different than a visit (session) in Google Analytics.

- Historical data only goes back 3 months (a workaround is below).

Additionally, one should be aware of some caveats:

- The Image search vertical gets many times more impressions than web search, due to many more listings per page. Never analyze CTR or impressions for “All” verticals simultaneously; always analyze Web, Image, Mobile, or Video separately.

- I’ve seen instances where multiple listings for a single keyword appear to multiply impressions, driving CTR down unnaturally (for example, having two totally unique listings for a keyword may double impressions, cutting CTR in half). Sitelinks do not appear to multiply impressions though.

- The expected CTR varies widely depending on the scenario, so take care in benchmarking.

- Outliers. All the metrics can be prone to unexpected results. For instance, Avg. position can be massively impacted by uncommon personalization of results. Also, I am unaware if there is any accounting for multiple clicks or views by the same user, or if bots are taken into consideration. That said, the more clicks a keyword has, the less you need to worry about outliers, generally speaking.

New Search Queries report in development― Google announced January 27 that it is working on a new early alpha version of Search Queries. If you want to be a guinea pig, you can request to preview it here.

Cool Insights with Search Queries

Despite the caveats, there’s a ton you can do with Search Queries. Obviously, it’s very good to know which keywords people are Googling to get to your site.

And you probably know how to make use of rankings data. By the way, GWT “avg. position” data has been demonstrated to be relatively consistent with other ranking-checking methods.

Below are a few other fun insights.

Non-HTML Pages

I find that many, if not most, web marketers have never looked at search engine traffic data on PDFs and other downloads.

Out of the box, Google Analytics can only pull data on HTML pages. Well, one method (here’s more) to get more data on non-HTML pages is the Search Query report. And this is the best way to get keyword-level data on non-HTML pages. Just bust out your ctrl+f and look for the filetype extension (.pdf, .doc, etc…) in the URL.
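If the export is too big for ctrl+f, the same filetype filter can be sketched in a few lines of Python. This assumes you have already pulled the landing page URLs out of the downloaded report into a list; the function name and the extension list are my own placeholders, not anything GWT provides.

```python
import os
from urllib.parse import urlparse

# Extensions to treat as non-HTML downloads (an assumed list; extend as needed).
NON_HTML_EXTENSIONS = {".pdf", ".doc", ".docx", ".xls", ".xlsx", ".ppt", ".pptx"}

def non_html_pages(urls, extensions=NON_HTML_EXTENSIONS):
    """Return the URLs whose path ends in one of the given file extensions."""
    matches = []
    for url in urls:
        # Look only at the path so query strings don't hide the extension.
        path = urlparse(url).path
        ext = os.path.splitext(path)[1].lower()
        if ext in extensions:
            matches.append(url)
    return matches

pages = [
    "http://www.thingamabobs.com/guide.PDF?x=1",
    "http://www.thingamabobs.com/blog/",
    "http://www.thingamabobs.com/specs.doc",
]
print(non_html_pages(pages))
```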

Image and video SEO

It can be useful to look at web-only (regular) queries, image-only queries, and video-only queries. This data can explain weird things you might see in Analytics.

For example, a quick look at image-only queries revealed why we (still) get a lot of low-quality traffic to a random old blog post about naming the then new office pet.

Rest in peace, Link Bait

Image and video data can also help you determine the need for and effectiveness of SEO for images and SEO for video.

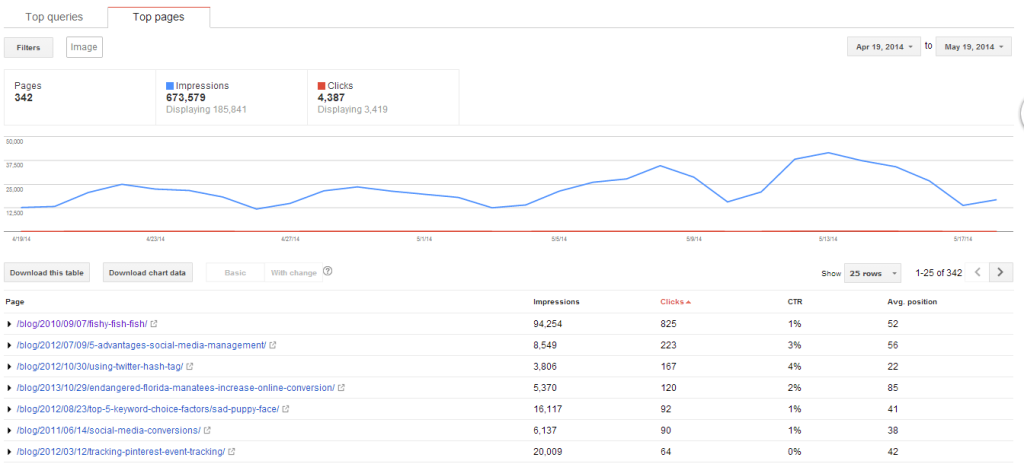

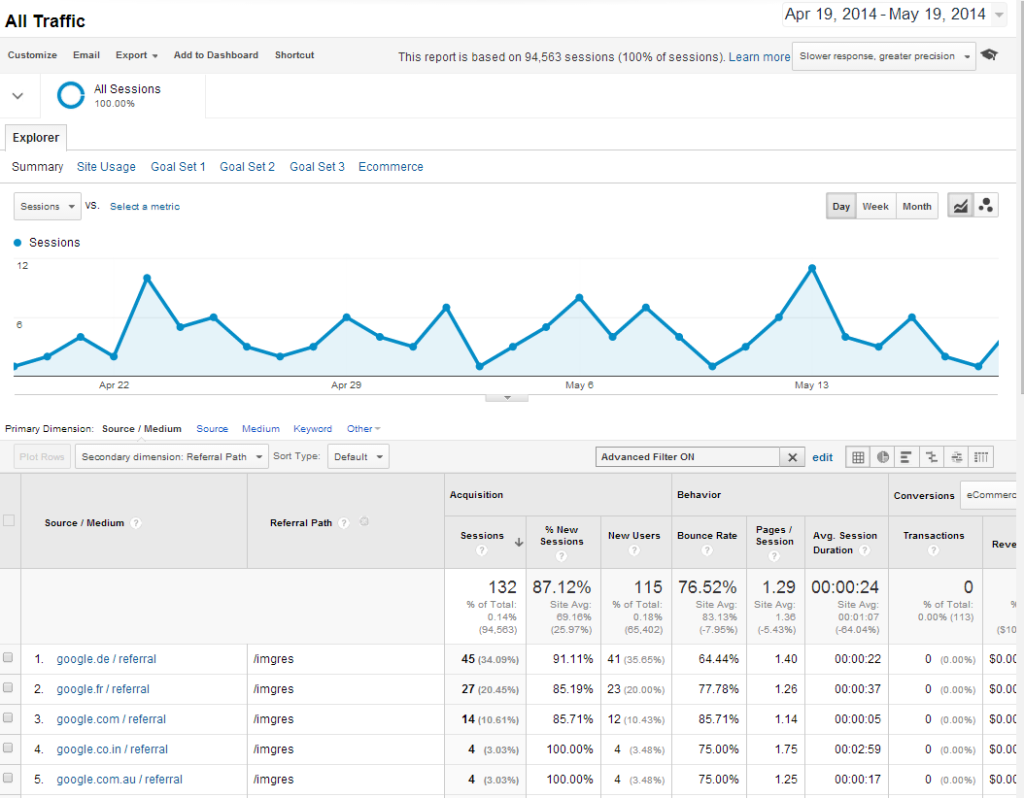

A very important thing to remember is that clicks do not equal visits ― especially for images. Whenever you compare GWT clicks to images to visits by image in Analytics you get wildly different results. Image clicks will be waaay more than image visits. Below, you’ll see those 4,000+ image clicks resulted in only 132 sessions in our site (these sessions do not include visits to only the image file URL; these are only sessions on HTML pages.)

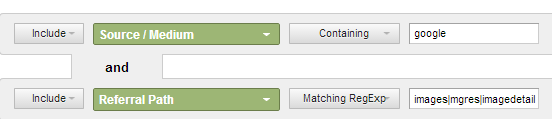

To find Google image traffic in Analytics:

- Go to Acquisition -> All Traffic.

- Set the advanced filter to Source Contains Google and Referral Path Matching RegExp images|mgres|imagedetail.

AJ Kohn has more details on tracking image search in Analytics.
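To sanity-check what that filter will and won’t catch, here is a small Python sketch that applies the same two conditions to a source and referral path. The function name is hypothetical, and I’m assuming a case-insensitive "contains" match on the source; the regular expression is the one from the filter above.

```python
import re

# Same pattern as the advanced filter: any of these substrings in the referral path.
IMAGE_REFERRAL = re.compile(r"images|mgres|imagedetail")

def is_google_image_referral(source, referral_path):
    """True when the source contains 'google' and the path matches the image pattern."""
    return "google" in source.lower() and IMAGE_REFERRAL.search(referral_path) is not None

print(is_google_image_referral("google.com", "/imgres"))
print(is_google_image_referral("google.com", "/search"))
```

Note that `mgres` also matches paths containing `imgres`, since the pattern is a substring match.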

I haven’t verified 100%, but I’m almost positive that GWT is counting any click on the image SERP, not just clicks to your site. On a related note, a change in the image SERPs in 2013 drastically decreased Google image traffic for everyone.

Another thing to note is that the image data has incredibly high impressions and low CTR compared to the other verticals, so it can really skew your data if you are viewing All search queries.

Mobile vs. Web

Mobile users often do different kinds of searches than non-mobile. For example, mobile users are more likely to be looking for a business near them. Use the Search Query data to get insight on how mobile and non-mobile Google users search differently to wind up on your site.

Another question to ask is “Are rankings drastically different for the same keyword on mobile vs. non-mobile?” If you rule out mobile image or video results skewing the data, then it’s possible your page ranks lower on mobile. While it’s probably them (Google) and not you, make sure you’re not making any big mobile SEO mistakes.

CTR Analysis

Looking at click-thru rate can reveal a number of opportunities and insights.

First, CTR data will help you understand the relationship between rank position and clicks.

Second, CTRs can also help you understand the SERPs for your niche. Often the CTR is highly dependent on external factors such as competition, number of advertisers, and amount of specialized results (like rich snippets, local carousels, images, etc…). Understanding which search queries tend to have lower CTR in your niche can help inform your future keyword research and SEO strategy.

Third, CTR may help tell you if your page is what the people are looking for. For example, we ranked #1 for “link bait” despite having vastly inferior backlink metrics to other articles on the topic. It appears our ranking was driven by our CTR being well above average. My theory is that most people Googling “link bait” just want to know what the term means and that the title of our page seems to users to be most likely to be the straightforward answer.

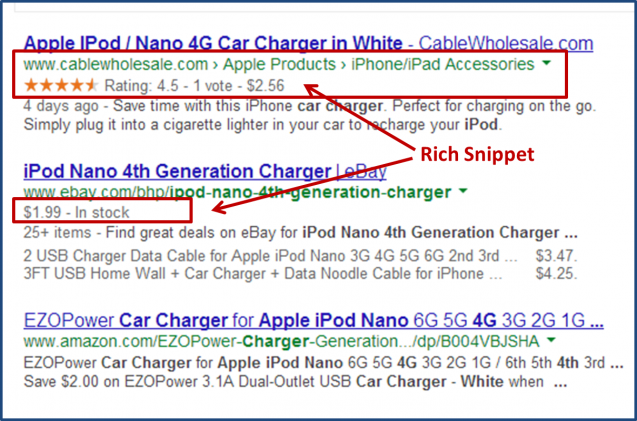

Fourth, sometimes the organic CTR is something you have some direct control over; and you want to find opportunities to directly improve CTRs. Below-average click-thrus may indicate an opportunity to employ rich snippets or tweak Meta descriptions.

Another important insight is the CTR of searches for your brand. While it will never be near 100%, you usually want to get it as high as it will go. See if you should try to win more real estate in the SERPs.

Finally, combine CTR analysis in both GWT and AdWords to gauge total CTR. This can aid in AdWords decisions, such as bidding.

Benchmarking CTR is required for the above analyses. You could look to the varying results of external studies for a figure on average CTR. If you ask me, my take-with-a-grain-of-salt go-to “average CTR” for the #1 position is 30%, but I’m sure you’d get a dozen answers if you asked a dozen SEOs.

You could also take the average CTR for your data. First, export the search query data into Excel. Then isolate a bucket of queries for a given rank (all queries for position 1, for example). Then take the average for the bucket.
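If you’d rather not do the bucketing by hand in Excel, the same idea can be sketched in Python. I’m assuming each exported row has been loaded as a dict with `avg_position`, `clicks`, and `impressions` keys (those names are mine, not GWT’s column headers). This version pools clicks and impressions per bucket rather than averaging each query’s CTR, so high-volume queries carry more weight; that is a design choice, not the only way to do it.

```python
import math
from collections import defaultdict

def ctr_by_position(rows):
    """Pooled CTR (total clicks / total impressions) per rounded rank position."""
    clicks = defaultdict(int)
    impressions = defaultdict(int)
    for row in rows:
        # Round half up so 1.5 lands in bucket 2 (Python's round() would not always).
        bucket = math.floor(row["avg_position"] + 0.5)
        clicks[bucket] += row["clicks"]
        impressions[bucket] += row["impressions"]
    return {
        bucket: clicks[bucket] / impressions[bucket]
        for bucket in sorted(impressions)
        if impressions[bucket] > 0
    }

# Made-up rows purely for illustration.
rows = [
    {"avg_position": 1.0, "clicks": 30, "impressions": 100},
    {"avg_position": 1.2, "clicks": 10, "impressions": 100},
    {"avg_position": 2.0, "clicks": 15, "impressions": 100},
]
print(ctr_by_position(rows))
```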

A third benchmarking method is simply to note current CTR and aim to improve upon it.

Search Queries Hacks

Integrate GWT into GA

Viewing GWT Search Query data in Google Analytics (GA) is super easy. All it requires is for the admin of both GA and GWT to log into GA and, in the left nav, go to Acquisition -> Search Engine Optimization -> Landing Pages.

If you’ve never connected GA and GWT, you’ll see a screen that states “This report requires Webmaster Tools to be enabled.” Simply click the set-up button and follow the easy instructions.

But there are limits.

One limitation of connecting the accounts is that you can only connect one GWT account to one GA account, and a GWT account can only be for one subdomain. So, if you have multiple subdomains, an individual GA view will only display some of your GWT query data. Another limitation is that you can’t view search queries and landing pages together in GA. These limitations can be overcome by viewing the data directly in GWT.

Export keyword by landing page

Viewing keyword data without landing page data is like having chocolate without more chocolate. Unfortunately, GWT doesn’t let you download the search query data by landing page without clicking on every landing page in the report.

Well, Noah has created a great bookmarklet that will automatically “click” on every landing page to reveal the search queries and then download it. So now you can have double chocolate. (OMG.)

Automatic exports

One problem with the Search Queries report is that it only goes back 90 days. That’s no good if you love historical data like I do. The obvious solution is to export it periodically, but this is a pain to constantly do manually. Fortunately, you can automate downloads: here’s a PHP method and a Python method.

Other GWT Reports

Change of Address

GWT help article here.

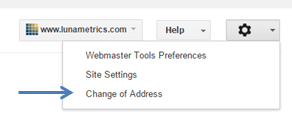

Unlike the rest of the reports described below, the Change of Address tool is not located in the left menu. It can be found in the top right. There are three main things to know:

- If you’re changing your domain name, submitting a change of address here with Google is essential (likewise for Bing).

- Never, ever submit a Change of Address unless you are actually changing the domain name for your entire site.

- Carefully follow all the steps Google gives you on the Change of Address Page. Google’s

(More major migration tips here, btw).

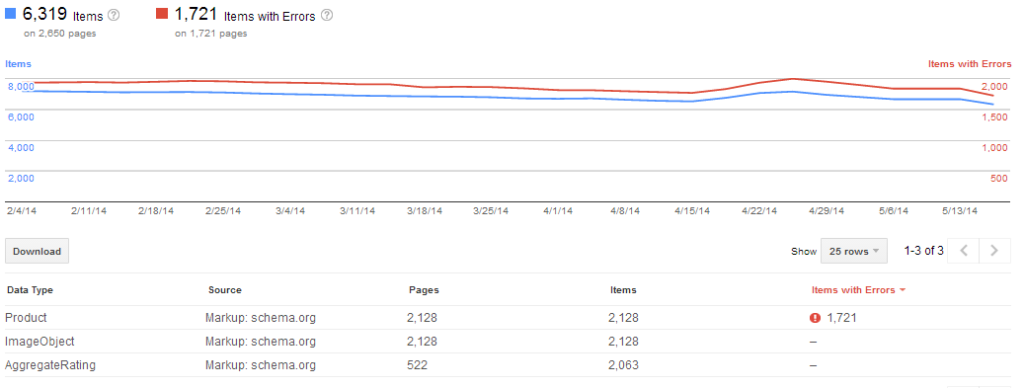

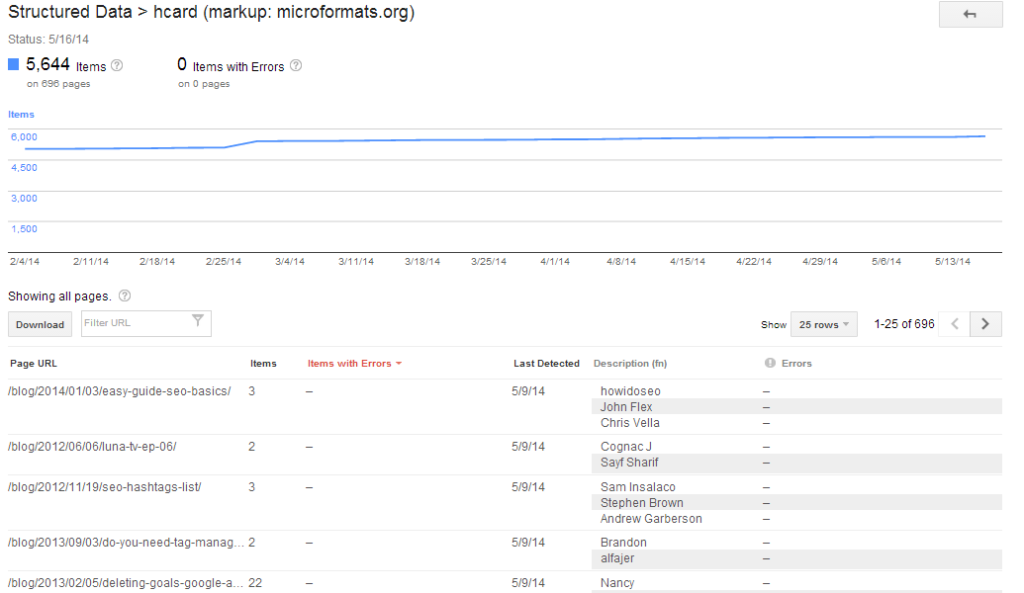

Structured Data

GWT help article here.

Hopefully, you know by now how Google uses schema.org markup to inform rich snippets that get displayed in the search results pages as recipes, reviews, and much more. And, that by implementing the right data markup, you can hope to trigger the data on your site to display as these rich snippets and dramatically improve click-through-rates for a notable bump in search traffic.

If structured data matters much to you, the GWT Structured Data report is essential.

By viewing stats on structured data for your site as a whole and by type of data, you can verify that Google is picking up structured data.

You can also get nice details on the individual data pieces being picked up and also on errors.

If the numbers and data don’t seem to match what you hope to expect, start diagnosing by looking for errors. Then, find a page that should be triggering a rich snippet but isn’t and test it on GWT’s handy-dandy Rich Snippets Testing Tool.

Data Highlighter

Excellent GWT help articles here. Nice article by Portent here.

The Data Highlighter is a tool that basically tells Google the same things schema.org markup would. The Data Highlighter is very user-friendly and can be used to tag at least 9 types of data, and every tag corresponds with schema.org markup (for example, using the highlighter for Events is equivalent in Google’s eyes to markup with schema.org/Event).

I haven’t used the Data Highlighter much myself. Whenever feasible, I prefer getting schema.org markup actually coded onto a page’s HTML, because the Data Highlighter is only seen by Google, and does not help Bing, Yahoo, and other search engines. It’s also not as robust as hard-coded schema.org and is known for being a little quirky.

That said, you should definitely familiarize yourself with the Highlighter’s supported data types. If hard-coding schema is not practical, take the Data Highlighter for a spin. It’s a great way to win rich snippets with little initial effort without a developer or plugin.

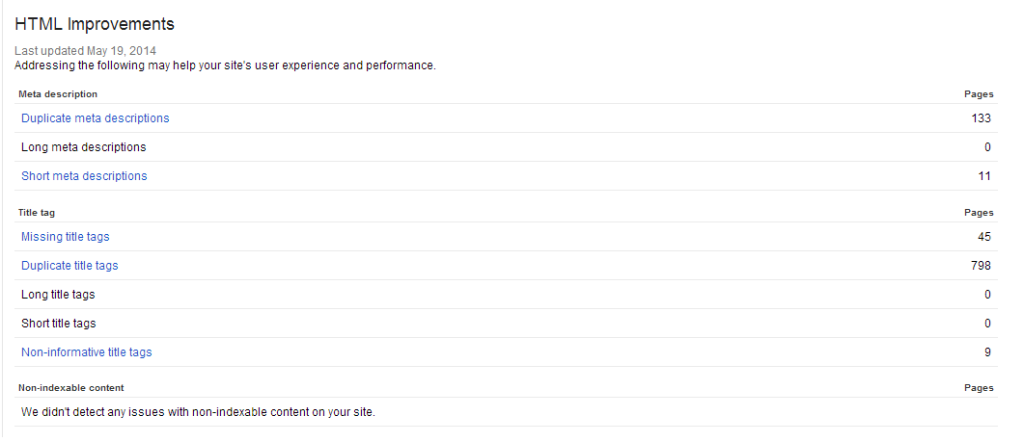

HTML Improvements

The HTML improvements section can not only help you improve the appearance of your SERP listings, but also help you find opportunities to address keyword optimization and duplicate content issues.

Find title tags and Meta descriptions that need to be fixed.

The HTML Improvements report does a good job of flagging pages that don’t conform to the following best practices for Title tags and Meta descriptions:

- Have a unique one for each page.

- Don’t make it too long or it will get truncated.

- Be informative.

Sniff out duplicate content.

As you likely know, it is generally a bad practice to have pages that do not contain content unique to that page. The first step in dealing with duplicate content problems is identifying them, and GWT offers one way of doing so that is too simple to ignore ― simply check for duplicate Title tags and Meta descriptions.

Find out which pages share which Title tags and, if there’s a lot of duplicate Titles, download the data so you can play around with it in Excel. There’s a good chance the URLs are duplicates. While there are other good ways of finding duplicate content (like with Screaming Frog), this method’s benefit is that it will show you some duplicate content Google has indexed.
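If Excel isn’t your thing, grouping URLs by shared Title can be sketched in Python. This assumes you’ve loaded the downloaded data as (URL, title) pairs; the function name and the case-insensitive comparison are my own choices for illustration.

```python
from collections import defaultdict

def duplicate_titles(pages):
    """Group URLs by normalized title and keep only titles shared by 2+ URLs.

    pages: iterable of (url, title) pairs.
    """
    by_title = defaultdict(list)
    for url, title in pages:
        # Ignore case and surrounding whitespace when comparing titles.
        by_title[title.strip().lower()].append(url)
    return {title: urls for title, urls in by_title.items() if len(urls) > 1}

# Made-up rows purely for illustration.
pages = [
    ("http://www.thingamabobs.com/red", "Red Thingamabobs"),
    ("http://www.thingamabobs.com/red?sort=asc", "Red Thingamabobs "),
    ("http://www.thingamabobs.com/blue", "Blue Thingamabobs"),
]
print(duplicate_titles(pages))
```

Each group that comes back is a set of URLs worth eyeballing for duplicate content.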

Caveat on non-indexable content data

I’ve worked on countless sites that have content that appears not to be indexed or even properly read by search engines, yet is not reflected in the “non-indexable content” data. I really have no idea what a page has to do to be flagged here (I’d love to hear insights if anyone knows). I almost always see GWT say “we didn’t detect any issues with non-indexable content”, even when that seems incorrect. So use caution.

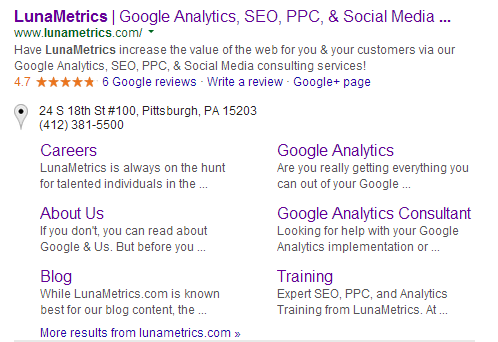

Sitelinks

Google your brand. Now do it on private browsing. Assuming you have sitelinks, do you like them? Occasionally, the sitelinks can link to pages that convert poorly or offer suboptimal UX. If you don’t like a sitelink, you can demote the Sitelink to reduce the chances of it appearing.

If you have a lot of branded traffic and a crappy sitelink or two, this is a big and easy win. The most common big win scenario I see is when a site was getting a lot of traffic to a page that has suddenly become dated (for example, a seasonal or out-of-stock product).

Just make sure this is the right thing to do. Factor in the possible impact of personalization, location, and device on the sitelinks you observe ― what you see may not be what everyone sees, and what you don’t want to see may be something some people do want to see. Also, if it happens that the vast majority of Google traffic to a page is coming through a sitelink (which you can determine by analyzing the page in the Search Queries and noting how many clicks are from branded queries), then you can guess on conversion and engagement for the sitelink in a Google Analytics landing page report filtered for only Google organic traffic.

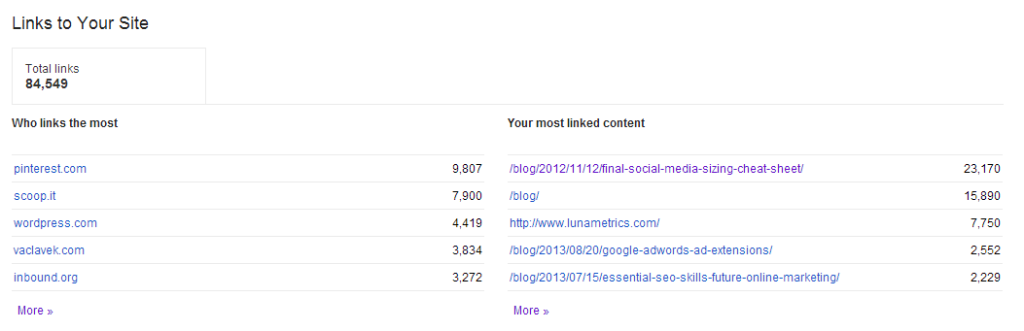

Links to Your Site

Links to Your Site gives you data on who links to which pages on your site. Since links remain the most important component of Google’s algorithm, understanding backlinks to your site is important in understanding how to improve your ability to rank.

I don’t use Links to Your Site much these days, because paid link data tools have more actionable insights. We use Open Site Explorer by Moz. ahrefs is another link data tool. Majestic SEO is a third option, and has the largest database among the premium link tools.

If you don’t have a paid tool, then GWT is very much worth your while. You also should examine the free limited versions of the three above-mentioned tools. You should check out the Inbound Links report of Bing Webmaster Tools, which I believe has higher limits on how many links it will report (or, at the least, documentation on its limits).

Who links the most

Nobody crawls the web as deep as Google does. GWT might have data on some links when the other tools don’t. That said, GWT doesn’t always display all the links Google knows about (I’m not sure what the quantity cap actually is, but you may be able to get more than 1,000 domains if you download the data). You can download the linking domains and check to see if a given domain is linking to you. This can be useful if you really want to see if a specific site links to you or if you just want to see what the other link tools are missing (typically the dirty underbelly of the interwebs).

You might also note if the quantity of linking domains is growing from month to month.

The data from “download more sample links” or “download latest links” is very noisy; I find I need to scrub out links from the same subdomain in Excel to get any use out of it.
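The scrubbing step can also be done outside Excel. Here is a rough Python sketch that keeps only the first link seen from each hostname, assuming you’ve loaded the linking URLs from the download into a list. The function name is hypothetical, and treating the full hostname as the "subdomain" is my reading of the cleanup described above.

```python
from urllib.parse import urlparse

def one_link_per_host(links):
    """Keep the first link from each hostname (subdomain), dropping the rest."""
    seen = set()
    kept = []
    for link in links:
        host = urlparse(link).netloc.lower()
        # Skip rows with no parseable hostname as well as repeats.
        if host and host not in seen:
            seen.add(host)
            kept.append(link)
    return kept

# Made-up links purely for illustration.
links = [
    "http://blog.example.com/a",
    "http://blog.example.com/b",
    "http://www.example.com/c",
]
print(one_link_per_host(links))
```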

Your most linked content

There’s a solid chance you can use this report to find your inbound-linked-to pages you won’t find elsewhere. Seeing which pages pull in the most links and why is my favorite thing I do when analyzing link-winning strategy. I don’t use GWT for this much, but it can help if you have a site that doesn’t get a ton of backlinks and every little link matters.

How your data is linked

While anchor text isn’t as critical to rankings as it used to be, it’s still worth looking at now and then. Unfortunately, the GWT report only lists up to 200 phrases.

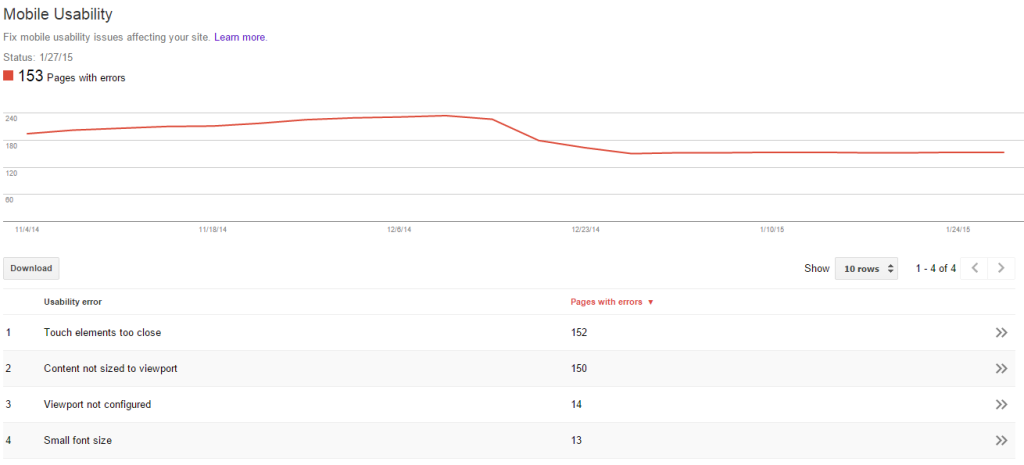

Mobile Usability

GWT help article here.

The year of mobile is no longer next year. As you may have heard in 2014, mobile internet usage exceeds that of desktop in the U.S., and mobile is the most popular form of any media worldwide.

In January 2015, it was reported that Google has been sending many mobile usability warnings to webmasters; that article also noted the many signs a new mobile ranking algorithm is incoming (count me among the bandwagon riders who feel mobile UX will be a ranking factor). Certainly, Google has been making a serious effort to communicate mobile SEO best practices.

This all underscores the importance of the new Mobile Usability report, which Google announced in late October 2014. It lists the following mobile UX issues (links go to Google’s associated lit on best practices):

- Flash content

- tiny fonts

- fixed-width viewport (the viewport is a meta tag that tells browsers how to size a page)

- missing viewport

- content not sized to viewport

- clickable links/buttons too close

The report lists URLs that contain a given error. The list does not appear to be comprehensive (that is, not every URL is reported), but there should be more than enough reported errors for diagnostics.
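Because the report may not list every URL, you might want to spot-check the two viewport issues on pages yourself. Below is a minimal Python sketch that does this on a page’s HTML. It is my own rough heuristic, not Google’s actual test: it assumes a viewport is "fixed-width" whenever its content does not include `width=device-width`, and the class and function names are made up for illustration.

```python
from html.parser import HTMLParser

class ViewportFinder(HTMLParser):
    """Records the content attribute of the page's viewport meta tag, if any."""

    def __init__(self):
        super().__init__()
        self.content = None

    def handle_starttag(self, tag, attrs):
        attr = dict(attrs)
        if tag == "meta" and (attr.get("name") or "").lower() == "viewport":
            self.content = attr.get("content") or ""

def viewport_issue(html):
    """Return 'missing viewport', 'fixed-width viewport', or None (assumed heuristic)."""
    finder = ViewportFinder()
    finder.feed(html)
    if finder.content is None:
        return "missing viewport"
    if "width=device-width" not in finder.content.replace(" ", "").lower():
        return "fixed-width viewport"
    return None

print(viewport_issue('<head><meta name="viewport" content="width=320"></head>'))
```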

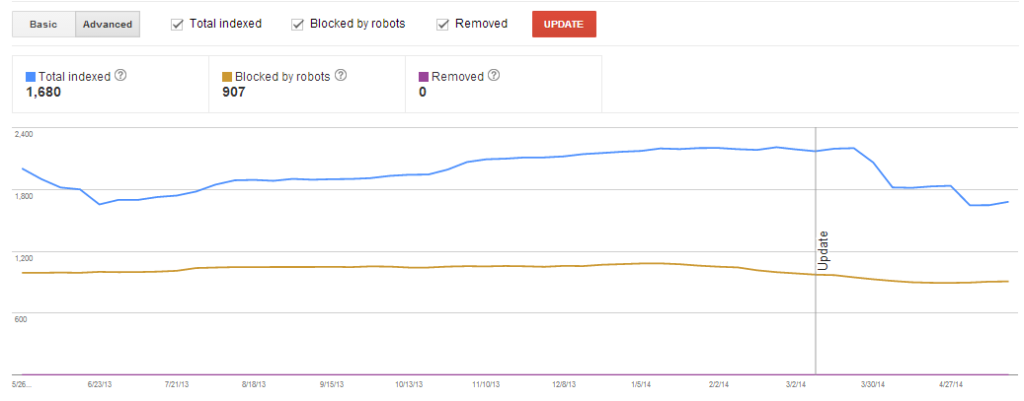

Index Status

GWT help article here.

Index bloat is one of the most common problems SEOs deal with. When Google has way more pages indexed than deserve to be organic landing pages, the consequent dissipation of link juice and constrained crawl budget can have a significant impact on SEO traffic.

The converse of index bloat is when pages that should be indexed are not indexed, and this is an equally important problem. There’s no shortage of horror stories of a site’s organic traffic dying because indexation was blocked via a problem with something like robots.txt, Meta robots, rel=canonical, or nofollow attributes. Often, when these issues are in their early stages, the impact on traffic is not yet apparent.

Check the Advanced Index Status report and examine total pages indexed, the number of pages removed, and the number of pages blocked by robots.txt. If any numbers have moved in a way you wouldn’t expect, investigate immediately.

For more information on crawling and indexation metrics, read this.

Crawl Errors

GWT help article here. (links to articles on specific types of errors on right)

404s

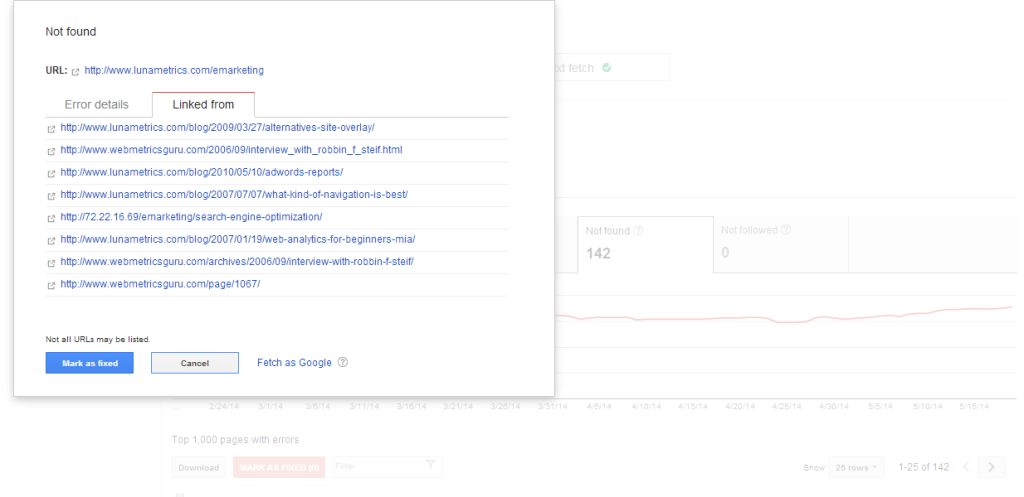

A 404 is the HTTP status code for Page Not Found. This error occurs whenever there is no page for the URL requested. Webmaster Tools reports 404 errors whenever Google’s spider crawls a link to a URL that has no actual page associated with it. Common causes of 404s include typos in the destination URL of a link and failure to redirect the URL of a page that was moved or deleted. Both causes of 404s can be detrimental to both the user experience and your SEO endeavors.

Note that many GWT 404s are outdated, “false alarms”, or triggered by bad links from insignificant pages no one ever visits. These may not represent any significant inconvenience to your users or wastage of link juice, but many 404s will be problematic. Click on the URL to see which pages link to it if you suspect the 404 may be a problem.

Resolve problem 404s by 301 redirecting to the appropriate page, by changing the destination URL of the inbound link, or by restoring content to the 404, depending on what is most practical and most beneficial to your users.

Note that if you utilize the “MARK AS FIXED” button, you will have more up-to-date data.

This post explains the right perspective on 404s. While the GWT report is super useful for trends and is a great data point, I often look to some other data points like Google Analytics for taking action on 404s (see #9 in SEO Measurement Mistakes Part 3: Crawling and Indexation Metrics).

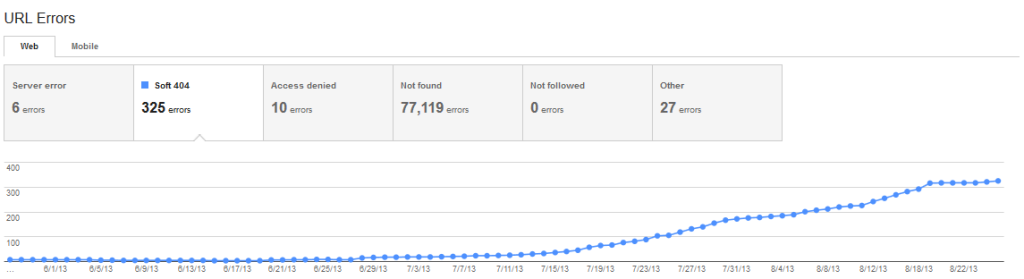

Soft 404s and Other Crawl Errors

404s get a lot of attention, but there are other crawl errors that can impact user-experience and SEO. For example 403s, 500s, and 503s are all non-crawlable. Other “not followed” URLs like redirect loops may not be crawlable. Google Webmaster Tools reports on all these.

Soft 404s are a user-experience and SEO issue, and GWT can be the best way to find them non-manually (though some might not actually be soft 404s).

However, GWT does not report on crawl issues like misplaced meta robots tags or 302 redirects.

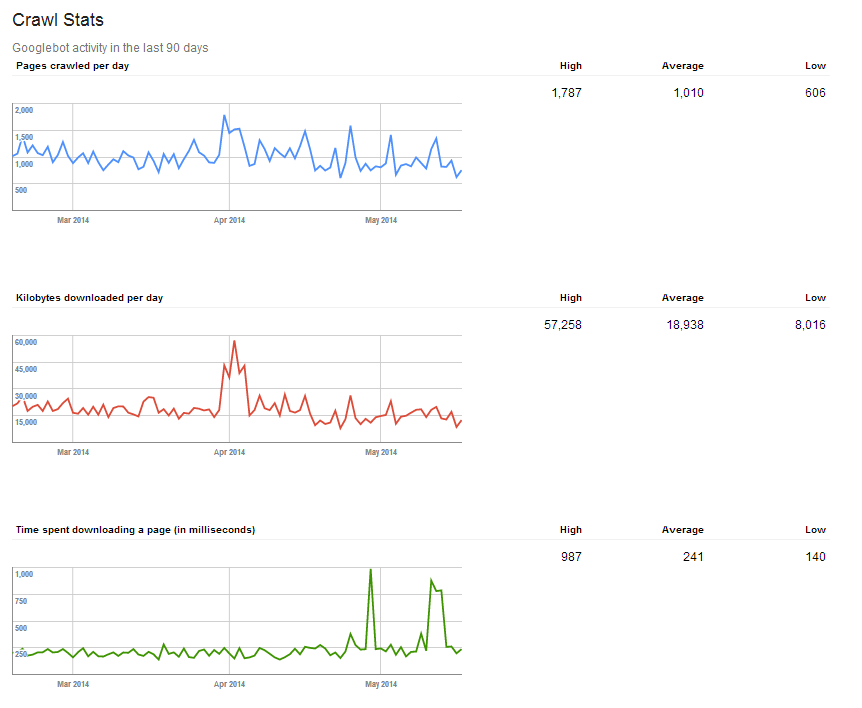

Crawl Stats

The data in the Crawl Stats may not be as rich as server log file data, but it’s better than not looking at any spider activity reports at all.

Crawl Stats has pretty volatile graphs, but do look for big, weird spikes and distinct trends. For example, Crawl Stats can tell you:

- If you have an increase in # of pages sucking crawl budget ― if pages crawled goes up, but kilobytes downloaded does not,

- If page load times suck crawl budget ― time spent downloading a page goes up and # of pages crawled goes down, or

- If crawl budget increases/decreases ― kilobytes downloaded per day will trend, and pages crawled will likely follow.
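The three patterns above can be written down as a rough rule of thumb. Here is a Python sketch that compares two periods of Crawl Stats averages and reports which pattern, if any, it sees. Everything specific here is an assumption of mine: the dict keys, the function name, and especially the 20% threshold for what counts as a real change. Given how volatile the graphs are, treat the output as a prompt to investigate, not a verdict.

```python
def diagnose_crawl(before, after, threshold=0.2):
    """Compare two periods of crawl averages and name the pattern observed.

    before/after: dicts with 'pages_per_day', 'kb_per_day', 'ms_per_page'.
    threshold: fractional change treated as meaningful (an assumed 20%).
    """
    def change(key):
        return (after[key] - before[key]) / before[key]

    pages = change("pages_per_day")
    kb = change("kb_per_day")
    ms = change("ms_per_page")

    findings = []
    # Pages crawled up, kilobytes downloaded roughly flat.
    if pages > threshold and abs(kb) <= threshold:
        findings.append("more pages sharing the same crawl budget")
    # Download time up, pages crawled down.
    if ms > threshold and pages < -threshold:
        findings.append("slow page loads eating crawl budget")
    # Kilobytes trending, with pages crawled moving the same direction.
    if abs(kb) > threshold and pages * kb > 0:
        findings.append("crawl budget increased" if kb > 0 else "crawl budget decreased")
    return findings

# Made-up numbers purely for illustration.
before = {"pages_per_day": 1000, "kb_per_day": 50000, "ms_per_page": 400}
after = {"pages_per_day": 1500, "kb_per_day": 51000, "ms_per_page": 410}
print(diagnose_crawl(before, after))
```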

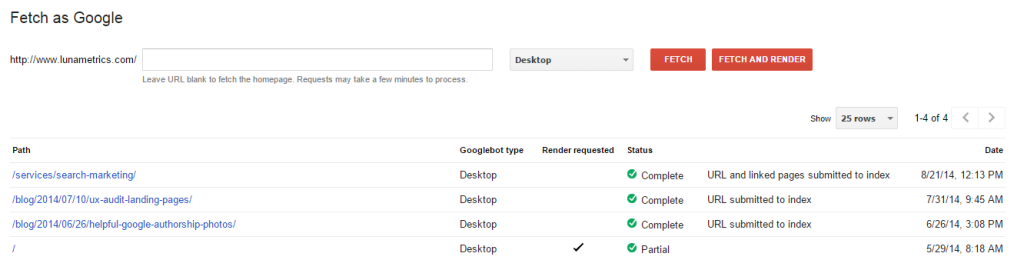

Fetch as Google

GWT help articles here.

Ensure Google can read your page

Until October 2014, I didn’t use Fetch as Google nearly as much as I should have. Then Google’s Pierre Far explained to me at PubCon that tools like seo-browser.com do not reliably show what Google can see with total accuracy.

In my opinion, it’s wise to second-guess some Fetch as Google results as I don’t feel it always paints the full picture on SEO readability, but I certainly would not audit a site without Fetch as Google.

Fetch as Google is an essential tool in making sure your pages are SEO-friendly (or at least Google-friendly). I recommend requesting a Fetch and Render on every template you have and every critical SEO landing page.

If a page’s status is not “complete”, then you need to analyze the page to see if all important content is Google-readable. Google has a list of every Fetch & Render status and its description here.

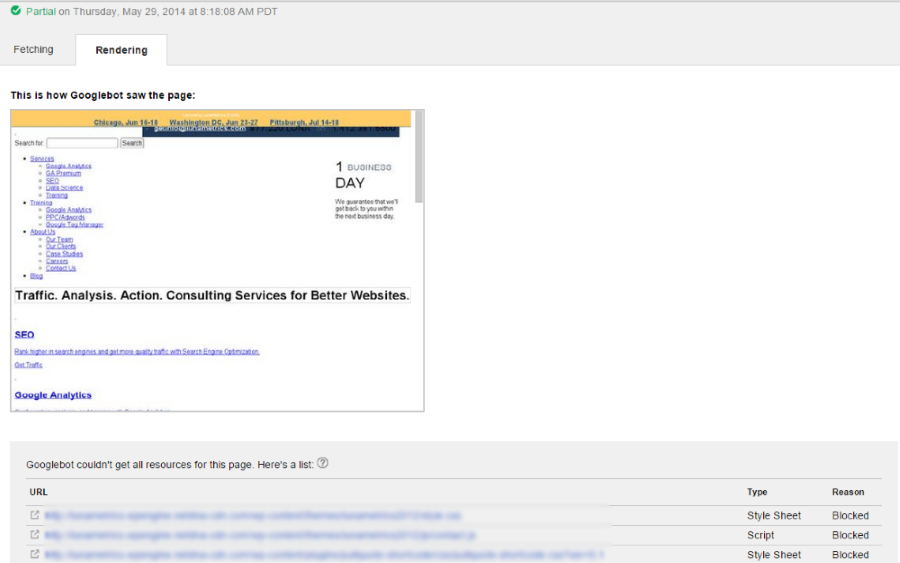

Partial Render

The screenshot above shows a page that couldn’t be fully digested by Google due to a robots.txt file blocking several scripts. Using Fetch as Google on many sites has shown me just how often this happens. (Incidentally, Pierre Far also told conference attendees that the biggest SEO error he sees is accidentally blocking Google from crawling all of your website.)

GWT’s robots.txt tester can be used to see if a URI is blocked by robots.txt. However, I prefer this robots.txt tester, because you can analyze multiple URLs.
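Checking multiple URLs against a robots.txt can also be sketched with Python’s standard library `urllib.robotparser`. The function name and example rules below are made up for illustration. One loud caveat: Python’s parser is not Googlebot, and I would not assume it handles every rule (wildcards in particular) the way Google does, so treat it as a first pass and confirm anything important in GWT.

```python
from urllib.robotparser import RobotFileParser

def blocked_urls(robots_txt, urls, user_agent="Googlebot"):
    """Return the URLs that the given robots.txt text disallows for user_agent."""
    parser = RobotFileParser()
    # parse() takes the file's lines, so no network request is made here.
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch(user_agent, url)]

# Made-up robots.txt and URLs purely for illustration.
robots_txt = "User-agent: *\nDisallow: /scripts/\n"
urls = [
    "http://www.thingamabobs.com/scripts/app.js",
    "http://www.thingamabobs.com/about",
]
print(blocked_urls(robots_txt, urls))
```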

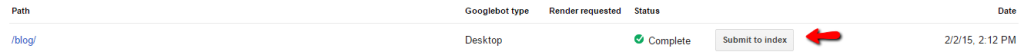

Submit URLs for indexation

The other use of Fetch as Google is to tell Google you want it to crawl a URL and include it in its index of URLs eligible for inclusion in search engine results pages.

When you hit “Submit to index”, Google gives you an option to ask it to crawl just the page submitted or that page and all the links on that page.

Fetch as Google is not a replacement for best practices for crawl-friendliness (like good robots.txt, minimal duplicate content, ping plugins, Sitemaps, and good internal linking).

However, Fetch should often be used for site upgrades, URL migrations, breaking important news, and launching batches of new content.

Note that hitting “Submit to index” does not guarantee a URL will get indexed, but does help get content in the SERPs faster.

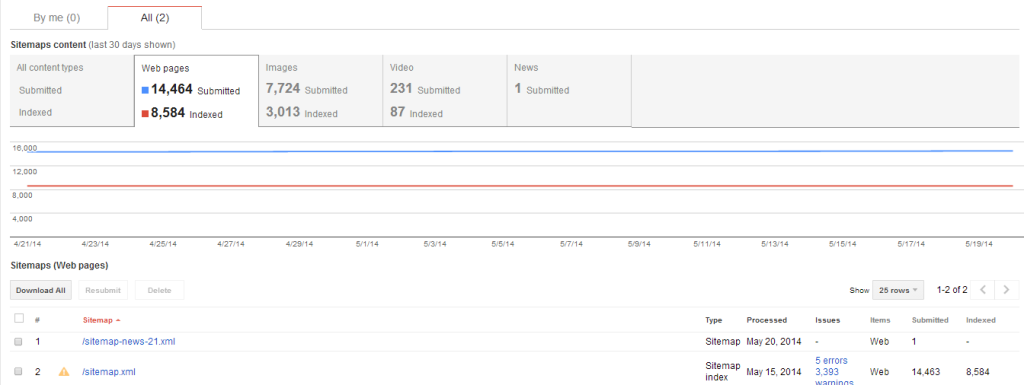

Sitemaps

These are the kind of Sitemap numbers you look into.

An XML Sitemap is an opportunity to tell Google and the other search engines what pages on your site you want to be crawled and indexed. For large sites or sites with frequently updated content, a Sitemap is pretty important. The search engines don’t guarantee they will abide by the Sitemap, but anecdotal evidence suggests time and time again that XML Sitemaps help increase the chance your pages are found and found fast (especially if the Sitemap is up-to-date and “clean”).

Sitemaps can get tricky — especially when you have a large site or when you use special Sitemaps for images, video, news, mobile, or source code. To ensure you’re doing your Sitemaps right and getting the most of them, always submit them with GWT’s Sitemap feature.

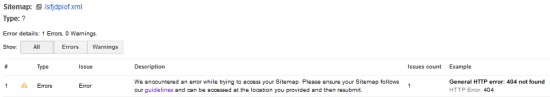

It is recommended that you always validate your Sitemaps before going live. And what better way to validate than through the eyes of Google? Simply click the big red “Add/Test” button and test away.

Once you’ve submitted a valid sitemap to Google, you should not ignore it, however.

Check in regularly to see if there are any errors or warnings. Often, a sitemap error will reveal a larger problem with your site. Here is the list of possible Sitemap errors.

In addition, pay attention to the number of URLs (or images, videos, etc.) indexed versus the number of URLs or items submitted. It is not uncommon for there to be a discrepancy here, but one of your SEO goals is to get the search engines to index everything you want indexed.

The tricky part is seeing which pages are not indexed (in fact, this topic could warrant its own article), but this may be possible with Google site search and Analytics landing page reports. It’s very time consuming manually, but can be automated with technical hacks.

If the pages not indexed are important to you, there are a few things you can do to improve indexation. For example, you could add or adjust tags in the Sitemap: the <priority> tag tells the search engine how important a URL is relative to other URLs on your site, and the <changefreq> tag indicates how frequently the page is updated (for example with links to new pages). Also, unindexed pages may be a red flag that those pages lack inbound links or lack content perceived by engines to be unique.
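To make those two tags concrete, here is a minimal Python sketch that builds a small Sitemap with `<priority>` and `<changefreq>` using the standard library’s `xml.etree.ElementTree`. The function name and the shape of the input dicts are my own assumptions; a real Sitemap generator would also write the XML declaration and handle size limits, which this skips.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"

def build_sitemap(entries):
    """Build sitemap XML from dicts with 'loc' and optional 'changefreq'/'priority'."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for entry in entries:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = entry["loc"]
        if "changefreq" in entry:
            ET.SubElement(url, "changefreq").text = entry["changefreq"]
        if "priority" in entry:
            # Priority is a value from 0.0 to 1.0.
            ET.SubElement(url, "priority").text = f"{entry['priority']:.1f}"
    return ET.tostring(urlset, encoding="unicode")

# Made-up entries purely for illustration.
print(build_sitemap([
    {"loc": "http://www.thingamabobs.com/", "changefreq": "daily", "priority": 1.0},
    {"loc": "http://www.thingamabobs.com/about", "changefreq": "yearly", "priority": 0.3},
]))
```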

On a related note, I wrote about building Sitemaps here.

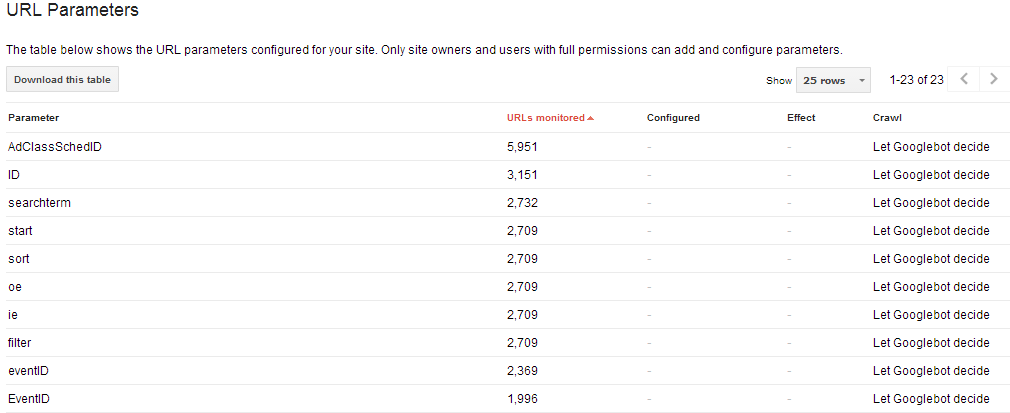

URL Parameters

GWT help article here.

When you first go into this report, you’ll see a doorway page that states “Use this feature only if you’re sure how parameters work. Incorrectly excluding URLs could result in many pages disappearing from search.” Heed that warning and don’t change the parameter settings unless you know what you’re doing.

But even if you kinda don’t, there is some useful data in here.

When I’m doing an SEO audit, I like to look at the most commonly used parameters, see how the site is using them, see if they’re tied to un-informative URL names, and see if any are causing duplicate content or being a major drag on crawl budget. Don’t worry about utm parameters, which are related to Google Analytics and well understood by Google.

One way to find the URLs with the parameter is to Google “inurl:?yourparameter= site:yoursite.com”. This will give you an indication of which parameters are getting indexed. The other method is to look for the parameters in the results of a site crawl.
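For the site crawl method, tallying parameters is easy to sketch in Python. This assumes you have the crawled URLs in a list (for example, exported from your crawler); the function name is hypothetical, and skipping anything that starts with `utm_` follows the note above about utm parameters.

```python
from collections import Counter
from urllib.parse import urlparse, parse_qsl

def parameter_counts(urls, ignore_prefixes=("utm_",)):
    """Count how many times each query parameter name appears across the URLs."""
    counts = Counter()
    for url in urls:
        query = urlparse(url).query
        for name, _value in parse_qsl(query, keep_blank_values=True):
            # Skip analytics tagging parameters such as utm_source.
            if not name.startswith(ignore_prefixes):
                counts[name] += 1
    return counts

# Made-up crawl results purely for illustration.
crawled = [
    "http://www.thingamabobs.com/p?color=red&sort=asc",
    "http://www.thingamabobs.com/p?color=blue&utm_source=news",
    "http://www.thingamabobs.com/q",
]
print(parameter_counts(crawled).most_common())
```

The parameters at the top of that list are the ones to compare against what GWT reports.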

This analysis may lead you to identify non-canonical URLs; if so, you’ll want to apply duplicate content fixes. You can also configure the parameters in GWT, but this typically should only be a band-aid instead of a permanent fix, and (as noted) should always be done with much caution.

Endnotes

This guide was last updated at noon February 9, 2015. The guide was originally published in March of 2014. Sections with notable updates since then include Links to Your Site and Search Queries. New sections include Change of Address, Mobile Usability, and Fetch as Google.

I really hope this Webmaster Tools guide is useful to you. GWT really is incredibly powerful and underutilized. My favorite reports are Index Status, Sitemaps, Fetch as Google, and ― of course ― Search Queries. What are yours?

Are there any reports or functions of GWT you’d like me to cover? Got a hot Webmaster Tools tip you want to share? Leave a comment. We’ll continue to try to update and improve this guide in the future.